The ELK Stack combines three open-source tools: Elasticsearch, Logstash, and Kibana. Each component handles a distinct stage of the data analysis process. Elasticsearch is a distributed, RESTful search and analytics engine designed to scale horizontally, stay available when individual nodes fail, and make newly indexed data searchable in near real time, which enables fast retrieval and analysis.

Logstash is a data processing pipeline that ingests data from multiple sources, transforms it, and then sends it to a “stash” such as Elasticsearch. This lets data from diverse sources be brought together on a single platform.

Kibana is a data visualization and exploration tool for the data stored in Elasticsearch. It provides a user-friendly, browser-based interface for exploring, visualizing, and analyzing that data. Together, the three tools are widely used for log analysis, real-time application monitoring, and operational intelligence.

Because Elasticsearch scales out across nodes, the stack can handle large volumes of data while still delivering timely insights. By understanding what each component does, organizations can use the stack to get the most out of their data: extracting valuable insights and making informed decisions.

Key Takeaways

- ELK Stack is a powerful tool for data analysis, consisting of Elasticsearch, Logstash, and Kibana.

- Understanding the components of ELK Stack is crucial for harnessing its full potential for data analysis.

- Setting up ELK Stack involves installing and configuring Elasticsearch, Logstash, and Kibana to work together seamlessly.

- Optimizing data ingestion with ELK Stack means choosing the right Logstash inputs and filters so that large volumes of data are collected and processed efficiently and reliably.

- Using Kibana for data visualization and exploration, and Elasticsearch for powerful data querying, is key to harnessing the full potential of ELK Stack for data analysis.

Setting Up ELK Stack for Data Analysis

Configuring Elasticsearch

The first step is to install and configure Elasticsearch, which involves setting up a cluster, defining index mappings, and configuring data retention policies.
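As a rough illustration of the mapping and retention steps, the sketch below builds two REST requests with Python's standard library: an index lifecycle management (ILM) policy that deletes an index 30 days after it is created, and an index with an explicit mapping that uses that policy. The cluster URL, index name, policy name, field names, and the 30-day period are all hypothetical; the requests are only constructed, not sent.

```python
import json
import urllib.request

# Hypothetical values for illustration only.
ES_URL = "http://localhost:9200"
INDEX = "app-logs"
POLICY = "app-logs-retention"

# Retention policy: with no rollover action, min_age is measured from index creation,
# so the index is deleted roughly 30 days after it was created.
ilm_policy = {
    "policy": {
        "phases": {
            "delete": {"min_age": "30d", "actions": {"delete": {}}},
        }
    }
}

# Explicit mapping: "keyword" fields for exact filtering and aggregation,
# "text" for full-text search, "date" for time-based queries.
index_body = {
    "settings": {
        "number_of_shards": 1,
        "number_of_replicas": 1,
        "index.lifecycle.name": POLICY,  # attach the retention policy above
    },
    "mappings": {
        "properties": {
            "@timestamp": {"type": "date"},
            "message": {"type": "text"},
            "host": {"type": "keyword"},
            "level": {"type": "keyword"},
        }
    },
}


def es_request(method, path, body):
    """Build (but do not send) a JSON request against the Elasticsearch REST API."""
    return urllib.request.Request(
        ES_URL + path,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method=method,
    )


# Create the policy first so the index can reference it.
requests_to_send = [
    es_request("PUT", f"/_ilm/policy/{POLICY}", ilm_policy),
    es_request("PUT", f"/{INDEX}", index_body),
]
# To apply against a real cluster: for req in requests_to_send: urllib.request.urlopen(req)
```

Defining the mapping up front, rather than relying on dynamic mapping, keeps fields such as host aggregatable as exact values instead of being analyzed as free text.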

Configuring Logstash

Next, Logstash needs to be installed and configured to ingest data from various sources, perform data transformations, and send it to Elasticsearch.
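Logstash's Elasticsearch output sends events in batches through the Elasticsearch Bulk API. To make that last hop concrete, the Python sketch below builds a Bulk API body by hand; it is a stand-in to show the wire format, not Logstash itself, and the index name and events are hypothetical.

```python
import json


def to_bulk_ndjson(index, docs):
    """Encode documents as a Bulk API body: an action line followed by a source line per doc."""
    lines = []
    for doc in docs:
        lines.append(json.dumps({"index": {"_index": index}}))  # action metadata
        lines.append(json.dumps(doc))                            # the document itself
    # The Bulk API requires newline-delimited JSON that ends with a newline.
    return "\n".join(lines) + "\n"


# Hypothetical events, as they might look after Logstash has transformed them.
events = [
    {"@timestamp": "2024-05-01T12:00:00Z", "level": "ERROR", "message": "db timeout"},
    {"@timestamp": "2024-05-01T12:00:05Z", "level": "INFO", "message": "retry ok"},
]

body = to_bulk_ndjson("app-logs", events)
# Send with: POST /_bulk and header "Content-Type: application/x-ndjson"
print(body)
```

Batching many events per request is what lets the pipeline keep up with high ingest rates; one HTTP round trip per event would be far slower.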

Configuring Kibana and Ensuring Optimal Performance

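Finally, Kibana needs to be installed and configured to visualize and explore the data stored in Elasticsearch, starting with pointing it at the cluster through the elasticsearch.hosts setting in kibana.yml. Before going live, confirm that the hardware and infrastructure can handle the expected data volume and processing load. Allocate resources deliberately (memory, particularly JVM heap for Elasticsearch and Logstash, as well as CPU and fast disk storage) and tune them for optimal performance.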
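One concrete tuning decision is the Elasticsearch JVM heap size. Elastic's guidance is to set the minimum and maximum heap to the same value, use no more than half of the machine's RAM (the rest is used by the filesystem cache), and stay below the compressed-object-pointer threshold near 32 GB. The helper below encodes that rule of thumb; the 26 GB cap is an assumed conservative default, and recent Elasticsearch versions size the heap automatically unless you override it.

```python
def suggest_es_heap_gb(total_ram_gb, cap_gb=26):
    """Rule-of-thumb heap: at most half of RAM, capped below the ~32 GB compressed-oops limit.

    cap_gb=26 is an assumed conservative cap; check the limit for your JVM and platform.
    """
    if total_ram_gb < 2:
        raise ValueError("this rule of thumb assumes at least 2 GB of RAM")
    return min(total_ram_gb // 2, cap_gb)


def jvm_options(heap_gb):
    """Elasticsearch recommends identical minimum (-Xms) and maximum (-Xmx) heap sizes."""
    return [f"-Xms{heap_gb}g", f"-Xmx{heap_gb}g"]


for ram in (8, 64, 128):
    heap = suggest_es_heap_gb(ram)
    print(f"{ram} GB RAM -> {heap} GB heap: {' '.join(jvm_options(heap))}")
```

Past the cap, adding RAM does not grow the heap; the extra memory goes to the operating system's page cache, which Elasticsearch relies on for fast reads.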

Implementing Security Measures

Additionally, security measures such as access control, encryption (TLS for traffic between nodes and with clients), and monitoring should be implemented to protect the data and infrastructure. Setting up ELK Stack for data analysis requires careful planning and attention to detail to ensure a smooth and efficient operation.
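Access control in Elasticsearch is expressed through roles and authenticated requests. The sketch below, using only the Python standard library, defines a hypothetical read-only role for analysts and builds the HTTPS request that would create it, authenticating with an API key. Elasticsearch expects API keys in the header form "ApiKey base64(id:api_key)"; the URL, role name, index pattern, and credentials here are placeholders, and the request is only constructed, not sent.

```python
import base64
import json
import urllib.request

ES_URL = "https://localhost:9200"  # HTTPS so credentials and data are encrypted in transit

# Hypothetical least-privilege role: analysts can search logs-* indices but not write to them.
analyst_role = {
    "indices": [
        {"names": ["logs-*"], "privileges": ["read", "view_index_metadata"]}
    ]
}


def api_key_header(key_id, api_key):
    """Build the Authorization header Elasticsearch expects for API-key authentication."""
    token = base64.b64encode(f"{key_id}:{api_key}".encode("utf-8")).decode("ascii")
    return {"Authorization": f"ApiKey {token}"}


req = urllib.request.Request(
    ES_URL + "/_security/role/log_analyst",
    data=json.dumps(analyst_role).encode("utf-8"),
    headers={"Content-Type": "application/json", **api_key_header("my-id", "my-secret")},
    method="PUT",
)
# urllib.request.urlopen(req) would send it; the caller's key needs permission to manage roles.
```

Granting only read and view_index_metadata means a leaked analyst credential cannot modify or delete indexed data.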

Optimizing Data Ingestion with ELK Stack

Optimizing data ingestion with ELK Stack is crucial for efficient data analysis. Logstash provides a wide range of input plugins for ingesting data from various sources such as log files, databases, message queues, and more. It is important to choose the right input plugins based on the data sources and formats to ensure smooth data ingestion.

Additionally, Logstash filters can be used to parse, enrich, and transform the data before sending it to Elasticsearch. The scalability and fault tolerance of the ingestion pipeline also matter. Logstash supports reliable ingestion with features such as persistent queues that buffer events on disk, automatic retries when Elasticsearch is temporarily unavailable or overloaded, load balancing across multiple Elasticsearch hosts, and a monitoring API for tracking pipeline throughput.
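To show what a parsing filter does to a raw line, here is a Python analogue of a typical grok + date filter chain for web access logs. It is not Logstash code: the regular expression is a simplified stand-in for grok's COMMONAPACHELOG pattern, and the derived status_class field is a hypothetical enrichment.

```python
import re
from datetime import datetime

# Simplified regex, roughly equivalent to grok's COMMONAPACHELOG pattern (an assumption).
COMMON_LOG = re.compile(
    r'(?P<client_ip>\S+) \S+ (?P<user>\S+) \[(?P<timestamp>[^\]]+)\] '
    r'"(?P<method>[A-Z]+) (?P<path>\S+) (?P<protocol>[^"]+)" '
    r'(?P<status>\d{3}) (?P<bytes>\d+|-)'
)


def parse_line(line):
    """Parse one access-log line into a structured event, or tag it as a parse failure."""
    m = COMMON_LOG.match(line)
    if m is None:
        # grok tags events it cannot parse with _grokparsefailure; mimic that here.
        return {"message": line, "tags": ["_grokparsefailure"]}
    event = m.groupdict()
    # Type conversion, so numeric fields can be aggregated and range-queried.
    event["status"] = int(event["status"])
    event["bytes"] = 0 if event["bytes"] == "-" else int(event["bytes"])
    # Like Logstash's date filter: use the log's own timestamp as an ISO-8601 @timestamp.
    ts = datetime.strptime(event.pop("timestamp"), "%d/%b/%Y:%H:%M:%S %z")
    event["@timestamp"] = ts.isoformat()
    # Hypothetical enrichment: a coarse status class (2xx, 4xx, ...) for easy grouping.
    event["status_class"] = f"{event['status'] // 100}xx"
    event["message"] = line
    return event


event = parse_line('127.0.0.1 - frank [10/Oct/2000:13:55:36 -0700] "GET /apache_pb.gif HTTP/1.0" 200 2326')
print(event["@timestamp"], event["status_class"])
```

Tagging failures instead of dropping them keeps unparseable lines searchable, so a broken pattern shows up in Kibana rather than silently losing data.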

By optimizing data ingestion with ELK Stack, organizations can ensure that their data analysis process is efficient and reliable.

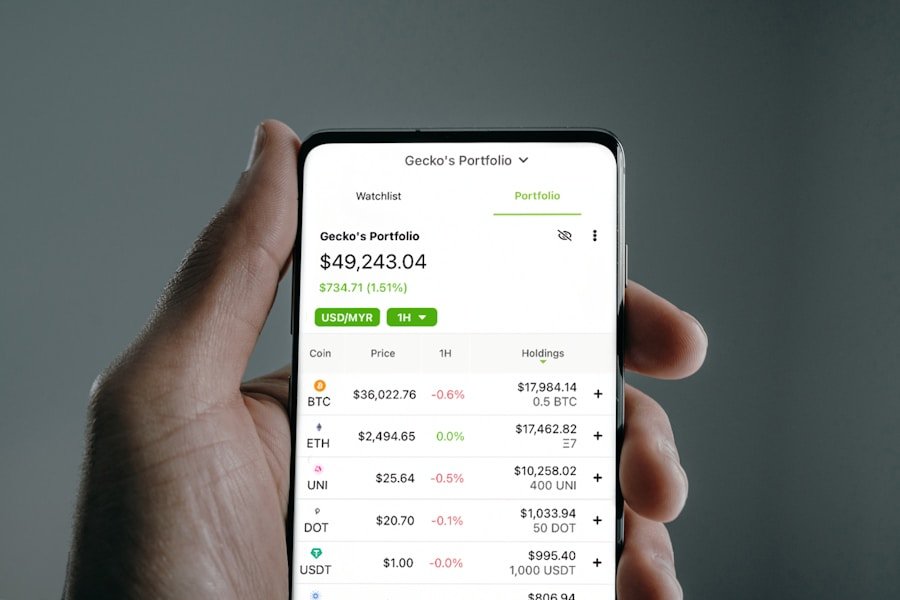

Utilizing Kibana for Data Visualization and Exploration

| Component | Role in Data Analysis |
|---|---|
| Elasticsearch | Full-text search, near real-time analytics, and distributed storage and analysis of data |
| Logstash | Data collection, parsing, and enrichment for structured and unstructured data |
| Kibana | Visualization and exploration of data through interactive dashboards and charts |
| Beats | Lightweight data shippers (such as Filebeat and Metricbeat) that send data to Logstash or Elasticsearch |
| Machine Learning | Elastic Stack feature for anomaly detection and other advanced, predictive analytics |

Kibana is a powerful tool for visualizing and exploring data stored in Elasticsearch. It offers a wide range of visualizations, including line charts, bar charts, pie charts, and heat maps. Users can build dashboards to monitor key metrics and trends in near real time.

Kibana also provides advanced features such as time series analysis, geospatial visualization, and machine learning integration for predictive analytics. Beyond visualization, Kibana offers powerful search and filtering for exploring the data: users can search with the Kibana Query Language (KQL) or Lucene syntax, and express more complex conditions as filters written in the Elasticsearch Query DSL.

Kibana also provides features such as saved searches, index patterns (renamed data views in Kibana 8.0), and field formatters for customizing the data exploration experience. By using Kibana for visualization and exploration, organizations can gain valuable insights from their data and make informed decisions.
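Index patterns (data views) are usually created in the Kibana UI, but they can also be scripted. The sketch below builds a request for the data views REST API as it exists in Kibana 8.x (POST /api/data_views/data_view); the Kibana URL and the app-logs* pattern are hypothetical, and on a secured deployment the request would also need credentials. It is only constructed here, not sent.

```python
import json
import urllib.request

KIBANA_URL = "http://localhost:5601"  # hypothetical Kibana address


def data_view_request(pattern, time_field="@timestamp"):
    """Build (but do not send) a request that creates a Kibana 8.x data view."""
    body = {"data_view": {"title": pattern, "timeFieldName": time_field}}
    return urllib.request.Request(
        KIBANA_URL + "/api/data_views/data_view",
        data=json.dumps(body).encode("utf-8"),
        # Kibana rejects API write requests that lack the kbn-xsrf header.
        headers={"Content-Type": "application/json", "kbn-xsrf": "true"},
        method="POST",
    )


req = data_view_request("app-logs*")
```

Setting timeFieldName is what makes Kibana's time picker and date histograms work against the matching indices.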

Leveraging Elasticsearch for Powerful Data Querying

Elasticsearch is a distributed search and analytics engine that provides powerful querying capabilities for analyzing large volumes of data. It supports full-text search, aggregations, filtering, sorting, and more. Users can create complex queries using the Elasticsearch Query DSL to retrieve specific data sets based on various criteria such as time range, keywords, and field values.
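A Query DSL request that combines full-text search with a time range and a field-value filter might look like the sketch below, built as a Python dict. The field names (message, host, @timestamp) are hypothetical and assume message is a text field and host a keyword field; the body would be sent to an endpoint such as POST /app-logs/_search.

```python
import json


def build_search(keywords, host, since="now-1h", size=20):
    """Full-text match on message, exact host filter, and a relative time range."""
    return {
        "size": size,
        "query": {
            "bool": {
                # "must" clauses have to match and contribute to the relevance score.
                "must": [{"match": {"message": keywords}}],
                # "filter" clauses only include or exclude documents; they are not scored
                # and Elasticsearch can cache them.
                "filter": [
                    {"term": {"host": host}},
                    {"range": {"@timestamp": {"gte": since, "lte": "now"}}},
                ],
            }
        },
        "sort": [{"@timestamp": {"order": "desc"}}],  # newest events first
        "highlight": {"fields": {"message": {}}},    # mark matching terms in results
    }


query = build_search("timeout", "web-01")
print(json.dumps(query, indent=2))
```

Putting exact-match and time conditions in filter rather than must is a common pattern: relevance scoring is only spent on the full-text part.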

Elasticsearch also provides features such as relevance scoring, highlighting, and suggestions for improving search results. In addition to querying, Elasticsearch provides powerful analytics capabilities such as aggregations for summarizing and analyzing the data. Users can create histograms, date histograms, terms aggregations, and more to gain insights into the data distribution and patterns.
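As a sketch of the aggregation side, the request below counts events per hour with a date_histogram and, inside each hour, breaks them down by log level with a terms aggregation. The field and aggregation names are hypothetical, and sample_response is a made-up response in the shape Elasticsearch returns, used only to show how the buckets are read.

```python
def build_aggs(interval="1h"):
    """Events per time bucket, each split by the most common log levels."""
    return {
        "size": 0,  # return aggregation results only, no individual hits
        "aggs": {
            "per_interval": {
                "date_histogram": {"field": "@timestamp", "calendar_interval": interval},
                "aggs": {
                    "top_levels": {"terms": {"field": "level", "size": 5}}
                },
            }
        },
    }


def bucket_counts(response):
    """Flatten a date_histogram response into (timestamp, doc_count) pairs."""
    buckets = response["aggregations"]["per_interval"]["buckets"]
    return [(b["key_as_string"], b["doc_count"]) for b in buckets]


# Made-up response for illustration; sub-aggregation buckets omitted for brevity.
sample_response = {
    "aggregations": {
        "per_interval": {
            "buckets": [
                {"key_as_string": "2024-05-01T12:00:00.000Z", "key": 1714564800000, "doc_count": 42},
                {"key_as_string": "2024-05-01T13:00:00.000Z", "key": 1714568400000, "doc_count": 17},
            ]
        }
    }
}

print(bucket_counts(sample_response))
```

Setting size to 0 skips fetching documents entirely, which is the usual choice when only the summary matters.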

By leveraging Elasticsearch for powerful data querying, organizations can perform advanced analytics on their data and uncover valuable insights.

Enhancing Data Analysis with Logstash Pipelines

Logstash provides a flexible and powerful pipeline architecture for enhancing data analysis. It allows users to define complex data processing workflows using input plugins, filters, and output plugins. Input plugins can be used to ingest data from various sources such as log files, databases, message queues, and more.

Filters can be used to parse, enrich, and transform the data before sending it to output plugins such as Elasticsearch. Logstash pipelines can be customized to meet specific data processing requirements such as data enrichment, normalization, anonymization, and more. Users can define conditional processing logic based on various criteria such as field values, patterns, and timestamps.
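The conditional, normalization, and anonymization steps described above can be sketched in Python as a single filter function. This is an analogue of what a Logstash filter block with if/else conditionals, a drop filter, and a keyed fingerprint step would do, not Logstash configuration; the secret key, field names, and index names are hypothetical.

```python
import hashlib
import hmac

SECRET_KEY = b"replace-with-a-real-secret"  # hypothetical; load real keys from a secret store


def process(event):
    """Normalize, anonymize, and route one event; return None to drop it."""
    out = dict(event)
    # Normalization: consistent upper-case log levels.
    out["level"] = str(out.get("level", "INFO")).upper()
    # Conditional drop of noisy debug events, like Logstash's drop filter.
    if out["level"] == "DEBUG":
        return None
    # Anonymization: replace the client IP with a keyed HMAC-SHA256 digest, so the
    # same IP always maps to the same ID but cannot be reversed without the key.
    if "client_ip" in out:
        ip = out.pop("client_ip")
        out["client_id"] = hmac.new(SECRET_KEY, ip.encode("utf-8"), hashlib.sha256).hexdigest()
    # Conditional routing on a field value, like `if [level] == "ERROR" { ... }`.
    out["target_index"] = "app-errors" if out["level"] == "ERROR" else "app-logs"
    return out


print(process({"level": "error", "client_ip": "10.0.0.1", "message": "db timeout"}))
```

A keyed hash is used instead of a plain hash because the IPv4 address space is small enough that unkeyed hashes can be reversed by brute force.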

By enhancing data analysis with Logstash pipelines, organizations can ensure that their data is processed efficiently and accurately.

Best Practices for Harnessing the Full Potential of ELK Stack

Harnessing the full potential of ELK Stack for data analysis requires following best practices in areas such as architecture design, performance tuning, and security. Design a scalable, fault-tolerant architecture that can handle the expected data volume and processing load, and size shards, nodes, and storage for the retention period you need.
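Capacity planning often starts with simple arithmetic. The sketch below assumes daily indices, a target primary shard size of about 40 GB (within the 10 to 50 GB range Elastic commonly recommends), and that on-disk size roughly equals raw ingest size, which ignores compression and indexing overhead. All inputs are hypothetical.

```python
import math


def primary_shards(daily_ingest_gb, target_shard_gb=40):
    """Primary shards per daily index so each shard stays near the target size."""
    return max(1, math.ceil(daily_ingest_gb / target_shard_gb))


def total_storage_gb(daily_ingest_gb, retention_days, replicas=1):
    """Disk needed for the retention window; every replica stores a full copy."""
    return daily_ingest_gb * retention_days * (1 + replicas)


# Hypothetical workload: 200 GB/day, kept for 30 days, one replica.
print(primary_shards(200), "primary shards per day")  # ceil(200 / 40)
print(total_storage_gb(200, 30), "GB of storage")     # 200 * 30 * 2
```

Numbers like these are a starting point to validate against real indexing and query benchmarks, not a substitute for them.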

Security measures such as access control, encryption, and monitoring should be implemented to protect the data and infrastructure. It is also important to monitor the health and performance of the ELK Stack components themselves, for example with Kibana's Stack Monitoring, metrics shippers such as Metricbeat, and the components' own logs. By following these best practices, organizations can ensure that their data analysis process is efficient, reliable, and secure.
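A basic automated health check can read the response of Elasticsearch's GET _cluster/health endpoint. In that response, status is green when all shards are assigned, yellow when all primary shards are assigned but some replicas are not, and red when at least one primary shard is unassigned. The function below turns such a response into warnings; the expected node count and the sample response (a single-node cluster whose replicas cannot be placed) are hypothetical.

```python
def health_summary(health, expected_nodes=None):
    """Turn a GET _cluster/health response (parsed JSON) into human-readable warnings."""
    warnings = []
    status = health.get("status")
    if status == "red":
        warnings.append("red: at least one primary shard is unassigned")
    elif status == "yellow":
        warnings.append("yellow: all primary shards are assigned, but some replicas are not")
    unassigned = health.get("unassigned_shards", 0)
    if unassigned:
        warnings.append(f"{unassigned} unassigned shard(s)")
    nodes = health.get("number_of_nodes", 0)
    if expected_nodes is not None and nodes < expected_nodes:
        warnings.append(f"only {nodes} of {expected_nodes} expected nodes are in the cluster")
    return warnings


# Hypothetical response: replicas cannot be placed on the same node as their primaries.
sample_health = {"status": "yellow", "number_of_nodes": 1, "unassigned_shards": 3}
for warning in health_summary(sample_health, expected_nodes=3):
    print("WARN:", warning)
```

Run on a schedule and wired to an alerting channel, a check like this catches lost nodes or unassigned shards before they turn into data loss.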

In conclusion, ELK Stack is a powerful tool for data analysis that provides a wide range of capabilities for ingesting, processing, storing, visualizing, and analyzing large volumes of data. By understanding the components of ELK Stack and following best practices for setting up and optimizing the environment, organizations can harness its full potential for gaining valuable insights from their data. Whether it’s log analysis, real-time application monitoring, or operational intelligence, ELK Stack provides the tools needed to make informed decisions based on data-driven insights.

FAQs

What is ELK Stack?

ELK Stack is a combination of three open-source tools: Elasticsearch, Logstash, and Kibana. It is used for log management and data analysis.

What is the full potential of ELK Stack for data analysis?

The full potential of ELK Stack for data analysis lies in its ability to collect, store, and analyze large volumes of data from various sources in near real time. It provides powerful search and visualization capabilities for data analysis.

How can ELK Stack be harnessed for data analysis?

ELK Stack can be harnessed for data analysis by setting up data pipelines using Logstash to collect and process data, storing the data in Elasticsearch for indexing and searching, and using Kibana for visualization and analysis.

What are the benefits of using ELK Stack for data analysis?

Some benefits of using ELK Stack for data analysis include real-time data processing, scalability for handling large volumes of data, powerful search and visualization capabilities, and the ability to analyze data from diverse sources.

What are some use cases for ELK Stack in data analysis?

ELK Stack can be used for various data analysis use cases such as log analysis, security information and event management (SIEM), application performance monitoring, business intelligence, and operational analytics.