Data exfiltration, the unauthorized transfer of data from a computer or network, represents a significant threat to organizational security. Traditional data loss prevention (DLP) systems often rely on predefined rules and signatures, which can be circumvented by novel or obfuscated attack methods. This article explores the application of artificial intelligence (AI), specifically pattern-learning models, to enhance the detection and blocking of data exfiltration attempts.

The Evolving Landscape of Data Exfiltration

Data exfiltration techniques are becoming increasingly sophisticated, moving beyond simple bulk transfers. Attackers now employ diverse methods to steal sensitive information.

Stealthy Exfiltration Vectors

Sophisticated attackers often leverage less obvious channels, blending malicious traffic with legitimate network activity. This can include:

- Covert channels: Utilizing seemingly innocuous protocols or traffic patterns to embed stolen data. Examples include DNS tunneling, ICMP tunneling, or steganography within legitimate files. Imagine a data stream as a river. A covert channel is like a tiny, almost invisible stream branching off, carrying precious cargo unnoticed by the main river’s watchers.

- Encrypted traffic: Encrypting exfiltrated data makes it difficult for traditional DLP systems to inspect content. While encryption is essential for privacy, it also creates a blind spot for security tools that only inspect unencrypted payloads.

- Cloud services and legitimate applications: Abusing legitimate cloud storage, collaboration platforms, or social media to transfer data. This bypasses perimeter defenses that might block direct connections to unknown external servers.

- Low-and-slow exfiltration: Transferring small amounts of data over extended periods to avoid triggering volume-based alerts. This is akin to siphoning off a bucket of water from a large lake, one drip at a time, making it hard to notice a significant change.
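
As a rough illustration of how one of these vectors might be spotted, the sketch below flags DNS queries whose leftmost label is unusually long or random-looking, a common trait of DNS tunneling. The function names and both thresholds are assumptions chosen for illustration, not values from any particular product, and legitimate hostnames (for example CDN hashes) can trip the same heuristic.

```python
import math
from collections import Counter


def shannon_entropy(text: str) -> float:
    """Return the Shannon entropy of a string, in bits per character."""
    if not text:
        return 0.0
    counts = Counter(text)
    total = len(text)
    return -sum((n / total) * math.log2(n / total) for n in counts.values())


def looks_like_tunnel(query_name: str,
                      max_label_len: int = 40,
                      max_entropy: float = 3.8) -> bool:
    """Flag a DNS query whose leftmost label is very long or high-entropy.

    Both thresholds are illustrative assumptions and would need tuning
    against an organization's own DNS logs.
    """
    label = query_name.rstrip(".").split(".")[0].lower()
    return len(label) > max_label_len or shannon_entropy(label) > max_entropy


if __name__ == "__main__":
    for name in ("www.example.com", "0123456789abcdef.t.example.net"):
        print(name, looks_like_tunnel(name))
```

A heuristic like this is best treated as one input feature among many rather than a verdict on its own.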

Limitations of Traditional DLP

Rule-based DLP systems, while effective against known threats, struggle with adaptive adversaries. Their primary limitations include:

- Signature-dependence: They rely on predefined patterns or content signatures, making them vulnerable to previously unseen exfiltration techniques and to variations of known methods.

- High false-positive and false-negative rates: Overly aggressive rules can generate numerous false positives, desensitizing security teams. Conversely, overly lenient rules can lead to missed exfiltrations.

- Static nature: Rules must be updated manually, so the system cannot adapt to evolving attack methodologies in real time.

- Lack of contextual understanding: Traditional DLP systems often lack the ability to understand the context of data movement, such as whether a specific transfer is legitimate based on a user’s role or typical behavior.
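
The signature-dependence problem can be shown with a small sketch. Here a hypothetical content rule (`CARD_PATTERN`, an assumption standing in for a real DLP signature) matches a card-like number in plain text, but misses the same data once it has been Base64-encoded.

```python
import base64
import re

# Hypothetical signature: a 16-digit card-like number with optional separators.
CARD_PATTERN = re.compile(r"\b(?:\d{4}[- ]?){3}\d{4}\b")


def rule_based_match(payload: str) -> bool:
    """Return True if the payload matches the static content signature."""
    return CARD_PATTERN.search(payload) is not None


plain = "card=4111 1111 1111 1111"
# Trivial obfuscation: the attacker encodes the payload before sending it.
encoded = base64.b64encode(plain.encode()).decode()

if __name__ == "__main__":
    print("plain payload matched:", rule_based_match(plain))
    print("encoded payload matched:", rule_based_match(encoded))
```

The rule catches the plain payload and misses the encoded one, even though both carry identical information. Adding a rule for Base64 only moves the problem to the next encoding.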

AI as an Enabler: Pattern-Learning Models

AI-driven pattern-learning models offer a dynamic approach to identifying anomalies indicative of data exfiltration. Unlike static rules, these models learn what constitutes normal behavior and flag deviations.

Machine Learning Fundamentals

Machine learning, a subset of AI, enables systems to learn from data rather than from explicitly programmed rules. For exfiltration detection, this means training models on large datasets of network traffic and user activity, with labeled malicious examples where they are available.

- Supervised learning: This involves training models with labeled datasets, where data points are already classified as “normal” or “exfiltration.” The model learns to map input features (e.g., source IP, destination IP, data volume, protocol) to these labels.

- Unsupervised learning: This approach is used when labeled data is scarce. The model identifies inherent structures or clusters in the data without prior knowledge of classes. It excels at discovering anomalies or outliers that deviate significantly from learned normal patterns. Think of it as a shepherd who knows how sheep typically behave and immediately spots a wolf in sheep’s clothing, even if they haven’t seen that specific wolf before.

- Reinforcement learning: While less common for direct exfiltration detection, reinforcement learning could be used to optimize autonomous blocking actions, where an agent learns through trial and error to maximize rewards (e.g., successful blocks) and minimize penalties (e.g., legitimate traffic disruption).
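
The difference between the first two approaches can be sketched with a single feature. The example below assumes a hypothetical per-session feature (megabytes sent outbound) and deliberately simple models: a nearest-centroid classifier for the supervised case and a z-score baseline for the unsupervised case. Real deployments would use many features and stronger models.

```python
from statistics import mean, pstdev

# Hypothetical labeled sessions: (megabytes sent outbound, label).
labeled = [(2.0, "normal"), (3.5, "normal"), (2.8, "normal"),
           (250.0, "exfiltration"), (310.0, "exfiltration")]


def train_centroids(samples):
    """Supervised: compute the mean feature value for each label."""
    by_label = {}
    for value, label in samples:
        by_label.setdefault(label, []).append(value)
    return {label: mean(values) for label, values in by_label.items()}


def classify(value, centroids):
    """Assign the label whose centroid is closest to the value."""
    return min(centroids, key=lambda label: abs(value - centroids[label]))


def fit_baseline(values):
    """Unsupervised: summarize unlabeled traffic as mean and std deviation."""
    return mean(values), pstdev(values)


def is_outlier(value, baseline, z_threshold=3.0):
    """Flag values more than z_threshold standard deviations from the mean."""
    mu, sigma = baseline
    if sigma == 0:
        return value != mu
    return abs(value - mu) / sigma > z_threshold


if __name__ == "__main__":
    centroids = train_centroids(labeled)
    print(classify(200.0, centroids))
    baseline = fit_baseline([2.0, 3.5, 2.8, 3.1, 2.4])
    print(is_outlier(40.0, baseline))
```

The supervised model needs examples of exfiltration to learn from, while the unsupervised baseline only needs a sample of ordinary traffic and flags whatever falls far outside it.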

Types of Pattern-Learning Models

Various machine learning algorithms are applicable to data exfiltration detection. The choice of model depends on the specific data characteristics and detection objectives.

- Deep neural networks (DNNs): Multilayered neural networks capable of learning complex hierarchical features from raw data. They are effective for analyzing diverse data types, including network packet headers, process activity, and user behavior. Their ability to process high-dimensional data makes them well suited to intricate threat detection, provided enough training data is available.

- Support vector machines (SVMs): Algorithms that find an optimal hyperplane to separate different classes of data. They are effective for binary classification (normal vs. exfiltration) and can handle high-dimensional feature spaces.

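- Isolation forests: An anomaly detection algorithm that isolates anomalies directly rather than profiling normal behavior. It partitions the data with random splits; because anomalies are few and different, they tend to be separated in fewer splits than normal points. The method is efficient and scales well to large datasets.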

- Recurrent neural networks (RNNs) and Long Short-Term Memory (LSTM) networks: Particularly suited for analyzing sequential data, such as network traffic flows over time or user activity chronologies. They can detect subtle temporal patterns that precede or constitute exfiltration attempts.
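
To make the isolation forest idea concrete, here is a simplified pure-Python sketch. It is a teaching aid built on assumptions (two-dimensional points, hypothetical function names, default sizes picked for illustration); a production system would normally rely on a maintained library implementation.

```python
import math
import random


def _c(n: int) -> float:
    """Average path length of an unsuccessful binary-search-tree lookup."""
    if n <= 1:
        return 0.0
    if n == 2:
        return 1.0
    return 2.0 * (math.log(n - 1) + 0.5772156649) - 2.0 * (n - 1) / n


def _build_tree(points, depth, max_depth, rng):
    """Recursively split points on a random feature at a random value."""
    if depth >= max_depth or len(points) <= 1:
        return ("leaf", len(points))
    dim = rng.randrange(len(points[0]))
    lo = min(p[dim] for p in points)
    hi = max(p[dim] for p in points)
    if lo == hi:  # simplification: stop instead of trying another feature
        return ("leaf", len(points))
    split = rng.uniform(lo, hi)
    left = [p for p in points if p[dim] < split]
    right = [p for p in points if p[dim] >= split]
    return ("node", dim, split,
            _build_tree(left, depth + 1, max_depth, rng),
            _build_tree(right, depth + 1, max_depth, rng))


def _path_length(point, tree, depth=0):
    """Number of splits needed to reach the leaf that holds the point."""
    if tree[0] == "leaf":
        return depth + _c(tree[1])
    _, dim, split, left, right = tree
    child = left if point[dim] < split else right
    return _path_length(point, child, depth + 1)


def fit_forest(points, n_trees=100, sample_size=64, seed=0):
    """Build n_trees isolation trees, each on a random subsample."""
    if len(points) < 2:
        raise ValueError("need at least two points")
    rng = random.Random(seed)
    size = min(sample_size, len(points))
    max_depth = math.ceil(math.log2(size))
    trees = [_build_tree(rng.sample(points, size), 0, max_depth, rng)
             for _ in range(n_trees)]
    return trees, size


def anomaly_score(point, forest):
    """Score in (0, 1]; values near 1 mean the point is isolated quickly."""
    trees, size = forest
    avg = sum(_path_length(point, t) for t in trees) / len(trees)
    return 2.0 ** (-avg / _c(size))
```

Fitted on a tight cluster of (hypothetical) per-host feature pairs plus one extreme point, the extreme point receives a much higher score than a point in the middle of the cluster, because a single random split usually separates it from everything else.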

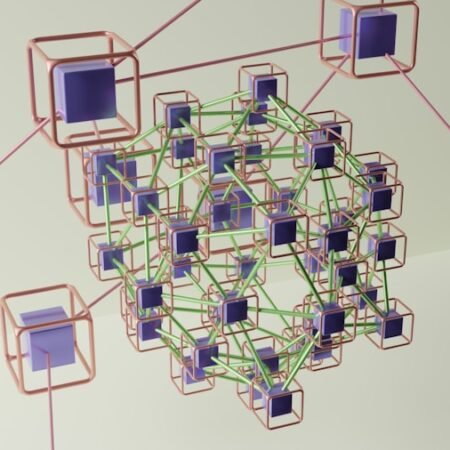

Architecting an AI-Powered Detection System

Implementing an effective AI-driven detection system requires careful consideration of data collection, model training, and integration into existing security infrastructure.

Data Ingestion and Feature Engineering

The quality and relevance of input data directly impact model performance. Comprehensive data collection from various sources is crucial.

- Network flow data (NetFlow/IPFIX): Provides insights into communication patterns, source/destination IPs, ports, and data volumes. This is the scaffolding upon which models build an understanding of network behavior.

- DNS logs: Reveal domain resolutions and can expose command-and-control (C2) communication or data exfiltration via DNS tunneling.

- Proxy logs: Detail web browsing activity, including URLs visited and data transferred, helping identify suspicious web-based exfiltration.

- Endpoint telemetry: Provides information on process execution, file access, registry modifications, and user activity on individual devices. This offers a granular view of potential data access and transfer.

- DLP alerts and security event logs (SIEM): Existing security alerts can be valuable as labeled data for supervised learning, helping the AI learn from historical incidents.

Feature engineering involves transforming raw data into meaningful features that the models can understand. This includes creating features like data transfer rates, protocol usage frequencies, destination reputation scores, and behavioral baselines for users or hosts.
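
A minimal sketch of this step, assuming a hypothetical `Flow` record with only a few fields (real NetFlow/IPFIX records carry many more), might aggregate raw flows into per-host features like so:

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Flow:
    """Hypothetical, heavily simplified flow record."""
    src: str
    dst: str
    dst_port: int
    bytes_out: int
    duration_s: float


def host_features(flows):
    """Aggregate flows into one feature dictionary per source host."""
    grouped = defaultdict(list)
    for flow in flows:
        grouped[flow.src].append(flow)

    features = {}
    for src, items in grouped.items():
        total_bytes = sum(f.bytes_out for f in items)
        total_time = sum(f.duration_s for f in items)
        features[src] = {
            "total_bytes_out": total_bytes,
            "bytes_per_second": total_bytes / total_time if total_time > 0 else 0.0,
            "distinct_destinations": len({f.dst for f in items}),
            # Share of flows sent to port 53, a rough DNS-usage indicator.
            "dns_flow_fraction": sum(f.dst_port == 53 for f in items) / len(items),
        }
    return features


if __name__ == "__main__":
    sample = [
        Flow("10.0.0.5", "203.0.113.7", 443, 1000, 2.0),
        Flow("10.0.0.5", "198.51.100.9", 53, 200, 1.0),
    ]
    print(host_features(sample))
```

Each dictionary then becomes one input row for a model, typically computed per host and per time window so that behavior can be compared over time.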

Model Training and Validation

Training machine learning models is an iterative process involving data preparation, model selection, and hyperparameter tuning.

- Baseline establishment: Initially, models learn what “normal” data movement and user behavior look like within an organization. This baseline is continuously updated.

- Anomaly detection: Once a baseline is established, the models continuously monitor incoming data for deviations that exceed predefined thresholds or statistical norms.

- Feedback loops and retraining: It is crucial to incorporate human feedback (e.g., security analyst validation of alerts) to refine the models and reduce false positives. Regularly retraining models with new data helps them adapt to evolving environments and threat landscapes.
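
The three steps above can be sketched as a small running baseline. The class below keeps an exponentially weighted mean and variance for one metric, flags values that deviate too far, and only learns from values that were not flagged. The class name, `alpha`, `z_threshold`, and `warmup` are assumptions chosen for illustration.

```python
class EwmaBaseline:
    """Exponentially weighted running mean and variance for one metric."""

    def __init__(self, alpha=0.1, z_threshold=3.0, warmup=5):
        self.alpha = alpha              # weight given to each new observation
        self.z_threshold = z_threshold  # deviations tolerated before flagging
        self.warmup = warmup            # observations needed before scoring
        self.mean = 0.0
        self.var = 0.0
        self.count = 0

    def is_anomalous(self, value):
        """Compare a value against the current baseline without updating it."""
        if self.count < self.warmup:
            return False
        std = self.var ** 0.5
        if std == 0:
            return value != self.mean
        return abs(value - self.mean) / std > self.z_threshold

    def update(self, value):
        """Fold a value into the baseline (also used for analyst feedback)."""
        if self.count == 0:
            self.mean = value
        else:
            delta = value - self.mean
            self.mean += self.alpha * delta
            self.var = (1 - self.alpha) * (self.var + self.alpha * delta * delta)
        self.count += 1

    def observe(self, value):
        """Score a value, then learn from it only if it was not flagged."""
        flagged = self.is_anomalous(value)
        if not flagged:
            self.update(value)
        return flagged


if __name__ == "__main__":
    baseline = EwmaBaseline()
    for mb in [100, 102, 98, 101, 99, 100, 101, 99, 500]:
        print(mb, baseline.observe(mb))
```

Skipping flagged values keeps a burst of exfiltration from being absorbed into "normal". When an analyst marks an alert as a false positive, calling `update` with that value feeds the correction back into the baseline.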

Real-Time Blocking and Response Mechanisms

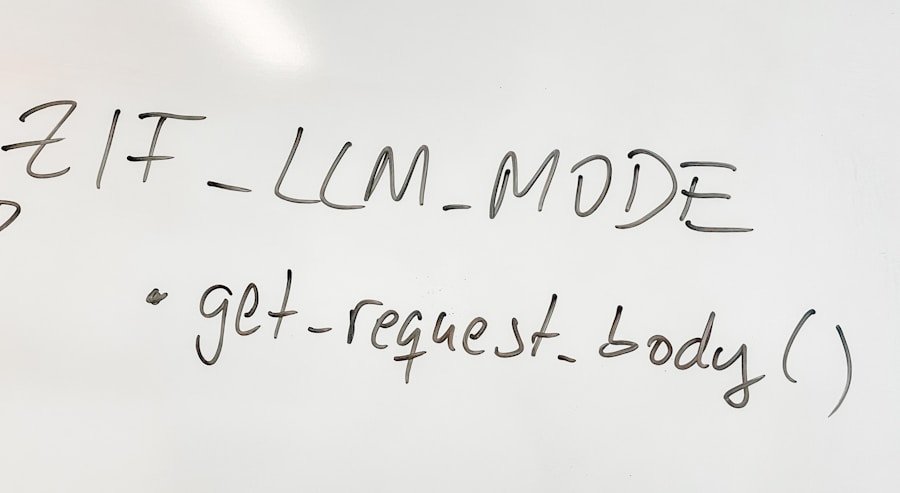

Detection alone is insufficient; an effective system must be capable of responding to identified threats in real time.

Automated Remediation Actions

Upon detecting a high-confidence exfiltration attempt, the system should trigger automated responses to mitigate the threat.

- Network segmentation/isolation: Automatically quarantining affected endpoints or isolating compromised network segments to prevent further data egress.

- Blocking network connections: Dynamically updating firewall rules or proxy configurations to block suspicious outbound connections. This is like closing a floodgate before a dam bursts.

- Process termination: For endpoint-based exfiltration, terminating suspicious processes identified as responsible for data transfer.

- User account lockout/suspension: If data exfiltration is linked to a compromised or malicious user account, immediate account suspension can prevent further damage.
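
One way to tie these actions to model output is a tiered policy, where more disruptive responses require higher confidence. The sketch below only decides which actions to take; the action names, the `Detection` fields, and both thresholds are hypothetical, and actually executing them would depend on the firewall, EDR, and identity systems in use.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    """Hypothetical detection record produced by the model."""
    host: str
    user: str
    process: str
    destination: str
    confidence: float  # model score in [0, 1]


def choose_actions(detection, block_threshold=0.8, isolate_threshold=0.95):
    """Return an ordered list of (action, target) pairs for a detection."""
    if detection.confidence < block_threshold:
        # Low confidence: leave traffic alone and let a human decide.
        return [("alert_analyst", detection.host)]

    actions = [
        ("block_connection", detection.destination),
        ("terminate_process", detection.process),
    ]
    if detection.confidence >= isolate_threshold:
        # Very high confidence: contain the host and the account as well.
        actions.append(("isolate_host", detection.host))
        actions.append(("suspend_account", detection.user))
    actions.append(("alert_analyst", detection.host))
    return actions


if __name__ == "__main__":
    d = Detection("ws-17", "jdoe", "rclone.exe", "203.0.113.7", 0.97)
    for action in choose_actions(d):
        print(action)
```

Keeping the decision logic separate from execution makes it easier to review the policy, test it, and run it in a "log only" mode before enabling automatic blocking.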

Integration with Security Orchestration, Automation, and Response (SOAR)

Integrating AI-driven detection with SOAR platforms enhances the overall security posture.

- Automated playbook execution: Upon alert generation, SOAR platforms can trigger predefined playbooks, automating response actions and reducing manual effort.

- Contextual enrichment: SOAR can enrich AI-generated alerts with additional threat intelligence, vulnerability data, and user context, providing security analysts with a comprehensive view.

- Incident management: Centralizing incident response workflows, ensuring timely investigation and resolution of exfiltration incidents.
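
The enrichment and playbook steps can be pictured with a toy model. Nothing below corresponds to a real SOAR product's API: the `PLAYBOOKS` table, the step names, and the dictionary-based lookups are all assumptions used to show the flow from alert to ordered response steps.

```python
# Hypothetical mapping from alert type to an ordered list of response steps.
PLAYBOOKS = {
    "dns_tunneling": ["block_domain", "open_incident"],
    "cloud_upload": ["revoke_session", "notify_manager", "open_incident"],
}


def enrich(alert, threat_intel, user_directory):
    """Return a copy of the alert with reputation and user context attached."""
    enriched = dict(alert)
    enriched["destination_reputation"] = threat_intel.get(
        alert["destination"], "unknown")
    enriched["user_role"] = user_directory.get(alert["user"], "unknown")
    return enriched


def run_playbook(alert, handlers):
    """Run each step of the playbook matching the alert type, in order.

    Unknown alert types fall back to simply opening an incident.
    """
    steps = PLAYBOOKS.get(alert["type"], ["open_incident"])
    return [handlers[step](alert) for step in steps]


if __name__ == "__main__":
    # Stand-in handlers that just describe what they would do.
    handlers = {
        step: (lambda alert, step=step: step + ":" + alert["host"])
        for step in ("block_domain", "open_incident",
                     "revoke_session", "notify_manager")
    }
    alert = {"type": "dns_tunneling", "host": "ws-17",
             "user": "jdoe", "destination": "bad.example"}
    enriched = enrich(alert, {"bad.example": "malicious"}, {"jdoe": "finance"})
    print(enriched)
    print(run_playbook(enriched, handlers))
```

In practice each handler would call out to a ticketing system, firewall, or identity provider, and the enrichment sources would be live threat intelligence and directory services rather than in-memory dictionaries.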

Challenges and Future Outlook

While AI offers significant advantages, implementing these systems is not without its challenges.

Data Privacy and Ethical Considerations

The extensive collection and analysis of data raise concerns about privacy, especially where personally identifiable information (PII) is involved.

- Anonymization and pseudonymization: Employing techniques to remove or obfuscate sensitive data fields during training and analysis.

- Data governance: Establishing clear policies for data collection, retention, and access to ensure compliance with regulations like GDPR and CCPA.

- Bias in models: Ensuring that training data is representative and does not introduce biases that could lead to unfair or inaccurate detection for certain user groups or data types.
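
As one example of pseudonymization, identifiers can be replaced with keyed hashes before records reach the training pipeline. The sketch below uses HMAC-SHA256 from the Python standard library; the field names, the 16-character truncation, and the key handling are assumptions, and a real deployment would need proper key management and a review of re-identification risk.

```python
import hashlib
import hmac


def pseudonymize(value: str, secret_key: bytes) -> str:
    """Replace an identifier with a keyed, deterministic token."""
    digest = hmac.new(secret_key, value.encode("utf-8"), hashlib.sha256)
    # Truncated for readability; this is an illustrative choice.
    return digest.hexdigest()[:16]


def scrub_record(record: dict, secret_key: bytes,
                 sensitive_fields=("user", "src_ip")) -> dict:
    """Return a copy of the record with sensitive fields pseudonymized."""
    return {
        key: pseudonymize(str(val), secret_key) if key in sensitive_fields else val
        for key, val in record.items()
    }


if __name__ == "__main__":
    key = b"example-key-do-not-use"  # placeholder; load from a secret store
    record = {"user": "jdoe", "src_ip": "10.0.0.5", "bytes_out": 1200}
    print(scrub_record(record, key))
```

Because the same input and key always produce the same token, the model can still learn per-user and per-host behavior without seeing the underlying identifiers. Only someone holding the key can link a token back to a known identifier by recomputing it.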

Computational Resources and Expertise

Implementing and maintaining AI systems requires significant resources and specialized skills.

- High computational costs: Training and deploying complex deep learning models can be computationally intensive, requiring substantial hardware and infrastructure investments.

- Scarcity of AI talent: The demand for skilled data scientists and AI engineers with cybersecurity expertise often exceeds supply.

- Model explainability: Understanding why an AI model made a particular decision can be challenging, especially with complex neural networks. This “black box” problem can hinder incident investigation and trust in the system.
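
For simple baseline-style detectors, a basic form of explanation is to report which features deviated most from their learned norms. The sketch below does this with per-feature z-scores; the feature names and baseline values are hypothetical, and this approach does not explain the internals of a deep neural network, which needs dedicated attribution techniques.

```python
def explain_alert(observation, baseline, top_n=3):
    """Rank features by how many standard deviations they sit from baseline.

    observation maps feature name to value; baseline maps feature name to
    a (mean, std) pair learned from normal activity.
    """
    contributions = []
    for name, value in observation.items():
        mean, std = baseline[name]
        z = (value - mean) / std if std > 0 else 0.0
        contributions.append((name, round(z, 2)))
    contributions.sort(key=lambda item: abs(item[1]), reverse=True)
    return contributions[:top_n]


if __name__ == "__main__":
    baseline = {
        "bytes_out": (1000.0, 200.0),
        "distinct_destinations": (5.0, 2.0),
        "dns_flow_fraction": (0.1, 0.05),
    }
    observation = {
        "bytes_out": 5000.0,
        "distinct_destinations": 6.0,
        "dns_flow_fraction": 0.15,
    }
    print(explain_alert(observation, baseline, top_n=2))
```

Attaching a ranked list like this to each alert gives analysts a starting point for investigation, even when the detection model itself is more complex.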

The Adversarial AI Landscape

Adversaries are also advancing, and they may attempt to manipulate AI models designed for detection.

- Adversarial examples: Crafting subtly altered input data that causes the AI model to misclassify an exfiltration attempt as legitimate traffic, or vice versa.

- Model poisoning: Injecting malicious data into training datasets to subtly corrupt the model’s learning process over time. This is like a saboteur subtly contaminating the water supply of a city, slowly making it unhealthy.

- Evolving attack patterns: Attackers will continuously adapt their methods, requiring AI models to be constantly updated and retrained to remain effective.

Moving forward, advancements in explainable AI (XAI) will be crucial for building trust and enabling security analysts to understand the rationale behind AI-driven alerts. Federated learning could allow models to be trained across multiple organizations without sharing raw sensitive data, addressing privacy concerns. The continuous arms race between attackers and defenders necessitates a proactive and adaptive approach, with AI serving as a critical ally in securing sensitive data.

FAQs

What is automated data exfiltration detection and blocking?

Automated data exfiltration detection and blocking refers to the use of artificial intelligence (AI) and pattern-learning models to automatically identify and prevent unauthorized attempts to transfer sensitive data outside of an organization’s network.

How do pattern-learning models work in automated data exfiltration detection?

Pattern-learning models analyze network traffic and user behavior to identify patterns associated with data exfiltration. These models use machine learning algorithms to continuously learn and adapt to new exfiltration techniques, enabling them to detect and block suspicious activities in real time.

What are the benefits of using AI for data exfiltration detection and blocking?

Using AI for data exfiltration detection and blocking can offer several benefits, including more accurate identification of potential threats, fewer false positives once models are tuned with analyst feedback, and the ability to detect sophisticated exfiltration techniques that traditional security measures may miss.

How does automated data exfiltration detection help organizations improve their cybersecurity posture?

Automated data exfiltration detection helps organizations improve their cybersecurity posture by providing proactive and real-time protection against data breaches. By quickly identifying and blocking unauthorized data transfers, organizations can mitigate the risk of sensitive information being compromised.

What are some considerations for implementing automated data exfiltration detection and blocking in an organization?

When implementing automated data exfiltration detection and blocking, organizations should consider factors such as the scalability of the solution, integration with existing security infrastructure, and the need for ongoing monitoring and maintenance to ensure the effectiveness of the AI-powered detection and blocking capabilities.