Accessing artificial intelligence (AI) models through application programming interfaces (APIs) has become a common practice, enabling developers to integrate powerful AI capabilities into their own applications. However, this accessibility also introduces significant security considerations. Just as a fortified castle needs a strong gate and vigilant guards to protect its treasures, AI model APIs require robust access control and security measures to prevent unauthorized use, data breaches, and model manipulation. This article outlines best practices for safeguarding your AI model APIs, ensuring that access is granted responsibly and your models remain secure.

Understanding the Landscape of AI Model API Security

The increasing reliance on AI models necessitates a proactive approach to their security. Mismanagement of API access can expose sensitive data, allow for the exfiltration of proprietary models, and lead to the misuse of AI for malicious purposes. AI models also add complexity that conventional software lacks: their outputs are probabilistic, they can leak information about the data they were trained on, and some are periodically retrained or fine-tuned, which can change their behavior over time.

The Vulnerability of Exposed AI Capabilities

When an AI model is exposed via an API, it becomes a potential target. Attackers might seek to:

- Gain unauthorized access: To use the model for unintended or malicious purposes, such as generating spam, creating deepfakes, or performing illegal activities.

- Extract sensitive data: If the model was trained on private or confidential information, attackers might try to probe the API to infer or directly steal this data.

- Manipulate model behavior: Adversarial inputs submitted through the API can be crafted to cause incorrect predictions or classifications, potentially leading to significant consequences, especially in domains like healthcare or finance.

- Exhaust resources: Denial-of-service (DoS) attacks can overwhelm the API, making it unavailable to legitimate users and incurring significant operational costs.

- Steal intellectual property: Systematically querying the API and analyzing its responses (a model extraction attack) can reveal aspects of the underlying model architecture or training data, or even let an attacker train a functional copy of the model.

The Importance of a Multi-Layered Defense

No single security measure is sufficient. A comprehensive strategy employs multiple layers of defense, much like an onion, where each layer contributes to the overall protection. From the initial authentication of a user to the monitoring of API calls, each stage presents an opportunity to reinforce security.

Implementing Robust Access Control Mechanisms

Access control is the cornerstone of API security. It dictates who can access the API, what actions they can perform, and under what conditions. Without proper controls, your API becomes an open door.

Authentication: Verifying Identity

The first line of defense is verifying the identity of any entity attempting to access your API. This ensures that you know who is knocking on your digital door.

API Keys

API keys are perhaps the most common method for API authentication. They are unique strings of characters that are issued to users or applications. When making an API request, the key is typically included in the request headers.

- Generation and Management: Keys should be unique, randomly generated, and long enough to offer sufficient entropy. A key management system is crucial for generating, revoking, and rotating keys. Treat API keys like passwords; they should be kept confidential and never hardcoded directly into publicly accessible client-side code or version control systems.

- Scope and Permissions: Consider defining different levels of access for different API keys. For example, a read-only key might be sufficient for some applications, while others require full read/write capabilities. This principle of least privilege is fundamental.
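The key-management advice above can be sketched in a few lines of Python. This is a minimal illustration, assuming an in-memory dictionary stands in for a real key management system: keys come from the standard-library `secrets` module, only a SHA-256 digest of each key is stored, and each key carries a set of scopes. The store, the function names, and the scope strings (`model:read`, `model:write`) are hypothetical.

```python
import hashlib
import secrets
from typing import Dict, Set

# Hypothetical in-memory store mapping SHA-256(key) -> scopes granted to that key.
# A real deployment would use a database or a dedicated key management system.
KEY_STORE: Dict[str, Set[str]] = {}


def _digest(key: str) -> str:
    # A fast unsalted hash is generally considered acceptable for long random keys,
    # unlike human-chosen passwords, which need a slow salted hash.
    return hashlib.sha256(key.encode("utf-8")).hexdigest()


def issue_key(scopes: Set[str]) -> str:
    """Generate a random key, persist only its digest, and return the plaintext once."""
    key = secrets.token_urlsafe(32)  # 32 random bytes (256 bits), 43 URL-safe characters
    KEY_STORE[_digest(key)] = set(scopes)
    return key


def authorize(presented_key: str, required_scope: str) -> bool:
    """Least privilege: the key must exist AND carry the scope the endpoint needs."""
    scopes = KEY_STORE.get(_digest(presented_key))
    return scopes is not None and required_scope in scopes


def revoke_key(key: str) -> None:
    """Revocation simply deletes the digest; rotation is issue_key() followed by revoke_key()."""
    KEY_STORE.pop(_digest(key), None)
```

Because only digests are stored, a leaked copy of the store does not reveal usable keys, and a read-only integration can be issued a key with `{"model:read"}` alone.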

OAuth 2.0 and OpenID Connect

For more complex scenarios, especially those involving third-party applications or user delegation, OAuth 2.0 and OpenID Connect (OIDC) offer more sophisticated authentication and authorization frameworks.

- OAuth 2.0: This framework allows users to grant third-party applications limited access to their resources on a service without sharing their credentials. The process typically involves obtaining an access token after user authorization.

- OpenID Connect: Built on top of OAuth 2.0, OIDC adds an identity layer, allowing clients to verify the identity of the end-user based on the authentication performed by an authorization server. This provides a standardized way to get information about the authenticated user.

- Token Management: Securely managing tokens (access tokens, refresh tokens, ID tokens) is critical. Tokens should have a defined expiration time, and refresh tokens should be handled with extreme care.
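As an illustration of token handling, the sketch below checks the claims of an access token after its signature has already been verified (by a JWT library or by token introspection at the authorization server; that step is not shown). It assumes JWT-style claims: `exp` and `nbf` as Unix timestamps, `aud` as a string or list, and `scope` as a space-delimited string. The expected audience URL and the scope names are hypothetical.

```python
import time
from typing import Optional

# Hypothetical audience identifier for this API; tokens minted for other APIs are rejected.
EXPECTED_AUDIENCE = "https://api.example.com/models"


def check_access_token_claims(
    claims: dict, required_scope: str, now: Optional[float] = None, leeway: int = 30
) -> bool:
    """Validate expiry, not-before, audience, and scope of an already signature-verified token.

    `leeway` tolerates small clock differences between servers (in seconds).
    """
    now = time.time() if now is None else now
    exp = claims.get("exp")
    if exp is None or now > exp + leeway:
        return False  # missing or expired: short-lived tokens limit the damage of a leak
    nbf = claims.get("nbf")
    if nbf is not None and now + leeway < nbf:
        return False  # token is not valid yet
    aud = claims.get("aud")
    audiences = aud if isinstance(aud, list) else [aud]
    if EXPECTED_AUDIENCE not in audiences:
        return False  # token was issued for a different resource server
    # Scopes are conventionally a single space-delimited string.
    return required_scope in claims.get("scope", "").split()
```

Rejecting tokens with no `exp` at all, rather than treating them as non-expiring, keeps the defined-expiration rule enforceable.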

Mutual TLS (mTLS)

Mutual Transport Layer Security (mTLS) provides an additional layer of security by requiring both the client and the server to authenticate each other. This is particularly useful for machine-to-machine communication where you control both endpoints and can issue a certificate to each.

- Certificate-Based Authentication: Each client and server presents a digital certificate that is verified by the other party. This significantly reduces the risk of unauthorized access by ensuring that only known and trusted clients can connect.
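On the server side, requiring client certificates can be expressed with Python's standard `ssl` module. This is a minimal sketch: the file paths are placeholders you would supply, and it assumes your clients' certificates are signed by a private CA whose certificate you pass as `client_ca_file`.

```python
import ssl
from typing import Optional


def make_mtls_server_context(
    cert_file: Optional[str] = None,
    key_file: Optional[str] = None,
    client_ca_file: Optional[str] = None,
) -> ssl.SSLContext:
    """Build a server-side TLS context that rejects clients without a valid certificate."""
    # Purpose.CLIENT_AUTH yields a server context; cafile sets which CA may sign client certs.
    ctx = ssl.create_default_context(ssl.Purpose.CLIENT_AUTH, cafile=client_ca_file)
    # Require a client certificate: the handshake fails if none (or an untrusted one) is sent.
    ctx.verify_mode = ssl.CERT_REQUIRED
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    if cert_file and key_file:
        ctx.load_cert_chain(certfile=cert_file, keyfile=key_file)  # the server's own identity
    return ctx
```

The context can then be used with `ctx.wrap_socket(sock, server_side=True)` or handed to a server that accepts an `SSLContext`; after the handshake, `getpeercert()` on the connection returns the client certificate's details, which can be mapped to an application identity. Without a `client_ca_file`, no client can pass verification, which is a safe failure mode.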

Authorization: Defining Permissions

Once an identity has been authenticated, authorization determines what actions that authenticated entity is permitted to perform. This is about defining what the guest is allowed to do once they are inside the castle.

Role-Based Access Control (RBAC)

RBAC is a common and effective authorization model. Users or applications are assigned roles, and each role is granted specific permissions to access resources or perform actions.

- Principle of Least Privilege: Assign only the necessary permissions for each role. Avoid granting broad access. Regularly review and update role assignments as responsibilities change.

- Granular Permissions: Define permissions at a fine-grained level. For example, instead of granting “all AI model access,” you might grant “access to image recognition model for classification” or “access to text generation model for summarization.”
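A role-to-permission mapping with granular permissions like those described above might look as follows. The role names and the `model:action` permission strings are hypothetical; the point is that each role lists only what it needs and unknown roles receive nothing.

```python
from typing import Dict, Iterable, Set

# Hypothetical roles; permissions name a specific model and a specific action.
ROLE_PERMISSIONS: Dict[str, Set[str]] = {
    "viewer": {"image-recognition:classify"},
    "analyst": {"image-recognition:classify", "text-generation:summarize"},
    "ml-admin": {
        "image-recognition:classify",
        "text-generation:summarize",
        "text-generation:deploy",
    },
}


def is_allowed(roles: Iterable[str], permission: str) -> bool:
    """Grant access if any of the caller's roles carries the permission; unknown roles get nothing."""
    return any(permission in ROLE_PERMISSIONS.get(role, set()) for role in roles)
```

Reviewing role assignments then amounts to reviewing this table, which is far easier than auditing permissions attached to individual users.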

Attribute-Based Access Control (ABAC)

ABAC offers a more dynamic and flexible approach to authorization. Access decisions are based on a set of attributes associated with the user, the resource, the action, and the environment.

- Dynamic Policies: ABAC allows for policies that can adapt based on context. For instance, a policy might state that a model can only be accessed between certain hours or from specific IP address ranges.

- Complex Scenarios: This model is well-suited for complex environments with a large number of users, resources, and dynamic access requirements.
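An ABAC decision combines attributes of the subject, resource, action, and environment. The sketch below encodes the time-window and IP-range example above as one hypothetical policy; the department names, sensitivity labels, network range, and business hours are all illustrative assumptions.

```python
import ipaddress
from dataclasses import dataclass
from datetime import datetime, time


@dataclass
class AccessRequest:
    subject_department: str    # attribute of the user
    resource_sensitivity: str  # attribute of the resource (the model)
    action: str                # attribute of the action
    source_ip: str             # attribute of the environment
    timestamp: datetime        # attribute of the environment


# Hypothetical policy parameters for illustration only.
ALLOWED_NETWORK = ipaddress.ip_network("10.20.0.0/16")
BUSINESS_HOURS = (time(8, 0), time(18, 0))


def evaluate(req: AccessRequest) -> bool:
    """Allow inference on 'public' models for anyone; allow it on 'confidential' models only
    for research staff on the internal network during business hours. Deny everything else."""
    if req.action != "infer":
        return False
    if req.resource_sensitivity == "public":
        return True
    if req.resource_sensitivity == "confidential":
        in_network = ipaddress.ip_address(req.source_ip) in ALLOWED_NETWORK
        start, end = BUSINESS_HOURS
        in_hours = start <= req.timestamp.time() < end
        return req.subject_department == "research" and in_network and in_hours
    return False  # default deny for unrecognized sensitivity levels
```

Unlike a static role table, the same user can be allowed at 10:30 from the office network and denied at 22:00 from home.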

Access Control Lists (ACLs)

ACLs are lists associated with resources that specify which users or groups are granted access and the type of access (e.g., read, write, execute).

- Resource-Centric: ACLs are tied directly to the resource they protect. While granular, managing ACLs across a large number of resources and users can become complex.
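To make the resource-centric nature concrete, here is a hypothetical pair of model resources, each carrying its own ACL of principals and permitted operations. The resource paths and principal names are invented for illustration.

```python
from typing import Dict, Set

# Hypothetical ACLs: each resource lists which principals may perform which operations.
MODEL_ACLS: Dict[str, Dict[str, Set[str]]] = {
    "models/fraud-detector-v3": {
        "group:risk-team": {"read", "execute"},
        "user:alice": {"read", "write", "execute"},
    },
    "models/summarizer-v1": {
        "group:support": {"execute"},
    },
}


def acl_permits(resource: str, principals: Set[str], operation: str) -> bool:
    """Consult only the resource's own ACL; resources without an ACL are denied by default."""
    acl = MODEL_ACLS.get(resource, {})
    return any(operation in acl.get(p, set()) for p in principals)
```

Checking one resource is a single lookup, but answering "what can Alice access?" requires scanning every resource's list, which is the management overhead noted above.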

Securing the API Infrastructure and Data Flow

Beyond controlling who can access the API, it’s crucial to secure the underlying infrastructure and the data that flows through it. This involves protecting the tunnels and conduits that connect the outside world to your valuable AI assets.

Transport Layer Security (TLS/SSL)

All API traffic should be encrypted using TLS; its predecessor, SSL, is deprecated and should be disabled. This is the digital equivalent of using a certified courier to transport sensitive documents: the contents are protected during transit.

- HTTPS: Enforce the use of HTTPS for all API endpoints. This encrypts data in transit, preventing eavesdropping and man-in-the-middle attacks.

- Certificate Management: Ensure that your TLS certificates are up-to-date, valid, and issued by a trusted Certificate Authority (CA). Regularly monitor certificate expiry dates to avoid service disruptions.
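Certificate expiry monitoring can be automated with the standard `ssl` module. This sketch opens a verified connection (TLS 1.2 or later) and converts the certificate's `notAfter` field into days remaining; the hostname in the usage note and the alerting threshold are hypothetical.

```python
import socket
import ssl
import time
from typing import Optional


def days_until_expiry(not_after: str, now: Optional[float] = None) -> float:
    """Convert a certificate's notAfter field (as returned by getpeercert()) to days remaining."""
    expires_at = ssl.cert_time_to_seconds(not_after)
    now = time.time() if now is None else now
    return (expires_at - now) / 86400


def fetch_not_after(host: str, port: int = 443, timeout: float = 5.0) -> str:
    """Connect over HTTPS and return the server certificate's notAfter field."""
    ctx = ssl.create_default_context()  # verifies the chain and hostname against system CAs
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2  # refuse older protocol versions
    with socket.create_connection((host, port), timeout=timeout) as sock:
        with ctx.wrap_socket(sock, server_hostname=host) as tls:
            return tls.getpeercert()["notAfter"]
```

A scheduled job could run `days_until_expiry(fetch_not_after("api.example.com"))` and raise an alert when the result drops below, say, 14 days. Because the connection is fully verified, the same check also fails loudly on an untrusted or mismatched certificate.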

Input Validation and Sanitization

The data submitted to your API needs to be meticulously checked. Imagine allowing anyone to throw anything through your delivery chute; you need to ensure only valid packages arrive.

- Preventing Injection Attacks: Malicious inputs can exploit vulnerabilities in the API or the underlying model. Validate all incoming data to ensure it conforms to expected formats, types, and ranges. Sanitize any user-provided data to remove or neutralize potentially harmful characters or code.

- Model-Specific Validation: For AI models, this means checking that inputs match the format the model expects. For example, an image recognition API should accept only image files of specific formats and within defined size limits.
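For the image example, a first-pass validator might check size and the file's leading "magic bytes" instead of trusting the client's declared content type. This is a minimal sketch under assumed limits (5 MiB, PNG or JPEG only); decoding the image with an imaging library and enforcing pixel-dimension limits would be a further step not shown here.

```python
# Hypothetical limits for an image-classification endpoint.
MAX_IMAGE_BYTES = 5 * 1024 * 1024
# File signatures ("magic bytes") for the formats this endpoint accepts.
ALLOWED_SIGNATURES = {
    b"\x89PNG\r\n\x1a\n": "png",
    b"\xff\xd8\xff": "jpeg",
}


class InvalidInput(ValueError):
    """Raised when a request body fails validation; map to an HTTP 400/413/415 response."""


def validate_image_upload(data: bytes, declared_content_type: str) -> str:
    """Reject empty, oversized, or non-PNG/JPEG uploads. Returns the detected format."""
    if not data:
        raise InvalidInput("empty body")
    if len(data) > MAX_IMAGE_BYTES:
        raise InvalidInput("image exceeds size limit")
    detected = next((fmt for sig, fmt in ALLOWED_SIGNATURES.items() if data.startswith(sig)), None)
    if detected is None:
        raise InvalidInput("unsupported or disguised file type")
    # The Content-Type header is client-controlled; require it to agree with the actual bytes.
    if declared_content_type != f"image/{detected}":
        raise InvalidInput("content type does not match file contents")
    return detected
```

Rejecting bad input before it reaches the model also avoids spending inference compute on requests that could never succeed.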

Rate Limiting and Throttling

Protecting your API from abuse and excessive load is essential for availability and cost management. This is akin to having a physical capacity limit for your building to prevent overcrowding.

- Preventing DoS Attacks: Rate limiting restricts the number of requests a user or IP address can make within a specific time period. This helps mitigate denial-of-service (DoS) and brute-force attacks.

- Fair Usage: Throttling can ensure that a few heavy users don’t consume all available resources, providing a more equitable experience for all API consumers.

- Dynamic Adjustment: Consider adjusting rate limits based on user tiers or subscription levels.
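One common way to implement rate limiting is a token bucket, which permits short bursts while capping the sustained request rate. The sketch below keeps one bucket per API key and sizes it by subscription tier; the tier names and limits are hypothetical, and the clock is injectable so the behavior can be tested deterministically.

```python
import time
from typing import Callable, Dict


class TokenBucket:
    """Allows bursts up to `capacity` requests and a sustained `refill_rate` requests per second."""

    def __init__(self, capacity: float, refill_rate: float,
                 clock: Callable[[], float] = time.monotonic):
        self.capacity = capacity
        self.refill_rate = refill_rate
        self.clock = clock
        self.tokens = capacity  # start full so new clients can burst immediately
        self.updated = clock()

    def allow(self, cost: float = 1.0) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, never beyond capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.updated) * self.refill_rate)
        self.updated = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False  # caller should respond with HTTP 429 Too Many Requests


# Hypothetical per-tier limits: (burst capacity, sustained requests per second).
TIER_LIMITS = {"free": (10, 1.0), "pro": (100, 20.0)}


class RateLimiter:
    """Keeps one bucket per API key, sized by the caller's subscription tier."""

    def __init__(self, clock: Callable[[], float] = time.monotonic):
        self.clock = clock
        self.buckets: Dict[str, TokenBucket] = {}

    def allow(self, api_key: str, tier: str) -> bool:
        bucket = self.buckets.get(api_key)
        if bucket is None:
            capacity, rate = TIER_LIMITS[tier]
            bucket = TokenBucket(capacity, rate, self.clock)
            self.buckets[api_key] = bucket
        return bucket.allow()
```

The `cost` parameter lets expensive calls (for example, long text generations) consume more than one token. This version is single-process and not thread-safe; a horizontally scaled API would typically keep bucket state in a shared store.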

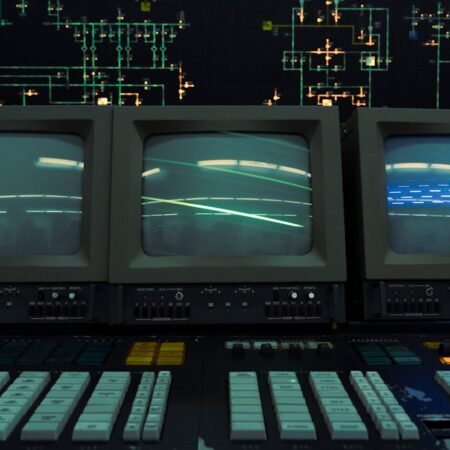

Logging and Monitoring

Comprehensive logging and real-time monitoring are vital for detecting and responding to security incidents. This is your sophisticated surveillance system, keeping watch over all activity.

- Audit Trails: Log all API requests, including the authenticated user, the endpoint accessed, the timestamp, the request parameters, and the response, redacting API keys, credentials, and other sensitive fields before they are written. This creates an audit trail that is invaluable for forensic analysis if a security incident occurs.

- Anomaly Detection: Implement monitoring tools that can detect unusual patterns in API usage, such as sudden spikes in request volume, access from unexpected locations, or repeated failed authentication attempts.

- Alerting: Set up alerts to notify security personnel immediately when suspicious activity is detected.
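The sketch below illustrates two of these ideas: a structured (JSON-lines) audit record with redaction, and a sliding-window detector for repeated failed authentication attempts. The sensitive field names, the threshold, and the window size are hypothetical; in practice the records would go to a log pipeline and the detector's `True` result would trigger an alert.

```python
import json
import time
from collections import defaultdict, deque
from typing import Deque, Dict, Optional

# Hypothetical parameter names that must never be written to the audit log.
SENSITIVE_FIELDS = {"api_key", "authorization", "password", "email"}


def audit_record(user: str, endpoint: str, params: dict, status: int,
                 timestamp: Optional[float] = None) -> str:
    """Serialize one API call as a JSON line, redacting sensitive parameters."""
    safe_params = {k: ("[REDACTED]" if k.lower() in SENSITIVE_FIELDS else v)
                   for k, v in params.items()}
    return json.dumps({
        "ts": time.time() if timestamp is None else timestamp,
        "user": user,
        "endpoint": endpoint,
        "params": safe_params,
        "status": status,
    }, sort_keys=True)


class FailedAuthMonitor:
    """Flags a client once it has `threshold` failed authentications within `window` seconds."""

    def __init__(self, threshold: int = 5, window: float = 60.0):
        self.threshold = threshold
        self.window = window
        self.failures: Dict[str, Deque[float]] = defaultdict(deque)

    def record_failure(self, client: str, ts: float) -> bool:
        q = self.failures[client]
        q.append(ts)
        # Drop failures that have fallen out of the sliding window.
        while q and q[0] <= ts - self.window:
            q.popleft()
        return len(q) >= self.threshold  # True means "raise an alert"
```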

Protecting the AI Model Itself: Beyond the API Layer

While securing the API is paramount, the protection of the AI model extends beyond the access layer. The model itself, and the data it has learned from, are valuable assets.

Data Security and Privacy

The data used to train AI models, and the data processed by the models, must be handled with the utmost care. This is about protecting the knowledge base that fuels your AI.

- Data Encryption: Encrypt sensitive training data both at rest and in transit. Implement robust access controls for the datasets themselves.

- Data Anonymization and Pseudonymization: Where possible, anonymize or pseudonymize data to protect individual privacy, especially for models trained on personal information.

- Compliance: Ensure compliance with relevant data protection regulations, such as GDPR, CCPA, and others, depending on your location and the nature of the data.
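Pseudonymization is often implemented with a keyed hash, so that the same identifier always maps to the same pseudonym (records can still be joined) but nobody without the key can recompute the mapping by hashing guessed inputs. This is a minimal sketch assuming HMAC-SHA256 and a secret key held separately from the data; the field names are hypothetical. Note that pseudonymized data is not anonymous: under GDPR it is still personal data, because the key allows re-identification.

```python
import hashlib
import hmac
from typing import Set


def pseudonymize(identifier: str, key: bytes) -> str:
    """Replace an identifier with its HMAC-SHA256 digest under a secret key."""
    return hmac.new(key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()


def pseudonymize_record(record: dict, fields: Set[str], key: bytes) -> dict:
    """Return a copy of `record` with the listed direct identifiers pseudonymized."""
    return {k: (pseudonymize(str(v), key) if k in fields else v) for k, v in record.items()}
```

The key would typically be generated once (for example with `secrets.token_bytes(32)`) and stored in a secrets manager; rotating it breaks linkage with previously pseudonymized records, which can be a deliberate privacy measure.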

Model Security and Integrity

Preventing modification or unauthorized access to the model weights and architecture is crucial to maintain its trustworthiness and prevent malicious alterations.

- Secure Model Storage: Store trained models in secure, access-controlled environments. Consider employing techniques like model versioning and integrity checks.

- Adversarial Robustness: Although adversarial robustness remains an active research area, consider applying techniques that make your AI models more resistant to adversarial attacks, such as adversarial training or input filtering.

- Intellectual Property Protection: If your model represents significant intellectual property, employ measures to prevent unauthorized copying or reverse engineering. This can include obfuscation techniques or legal agreements.
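An integrity check can be as simple as recording a SHA-256 digest of the model file at release time and verifying it before the file is loaded. This sketch assumes the expected digest is stored somewhere an attacker who can modify the model cannot also modify (for example, a signed release manifest or a model registry); the function names are hypothetical.

```python
import hashlib


def sha256_file(path: str, chunk_size: int = 1 << 20) -> str:
    """Hash the file in 1 MiB chunks so large model weights never need to fit in memory."""
    h = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def verify_model_artifact(path: str, expected_sha256: str) -> None:
    """Refuse to proceed if the model file differs from the version that was released."""
    actual = sha256_file(path)
    if actual != expected_sha256.lower():
        raise RuntimeError(f"integrity check failed for {path}")
```

Calling `verify_model_artifact` in the serving process's startup path means a tampered or corrupted model fails to load instead of silently serving predictions.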

Secure Deployment Practices

The environment in which your API and model are deployed must be secure. This is about building a secure house for your AI.

- Containerization and Orchestration: Utilize containerization (e.g., Docker) and orchestration (e.g., Kubernetes) for consistent and secure deployments. These technologies can help isolate environments and manage security configurations.

- Regular Patching and Updates: Keep all underlying infrastructure, operating systems, libraries, and dependencies patched and up-to-date to address known vulnerabilities.

- Network Segmentation: Implement network segmentation to isolate the API and model deployment from other parts of your infrastructure, limiting the blast radius of any potential breach.

- Secrets Management: Use dedicated secrets management tools (e.g., HashiCorp Vault, AWS Secrets Manager) to securely store and manage API keys, database credentials, and other sensitive information. Avoid storing secrets directly in code or configuration files.
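On the application side, a common pattern is for the deployment platform to fetch secrets from the secrets manager and inject them into the process at startup, for example as environment variables (Kubernetes Secrets can be exposed this way). This sketch assumes that pattern; the variable name `MODEL_API_KEY` is hypothetical. It fails fast instead of falling back to a hardcoded default, so a misconfigured deployment is caught immediately.

```python
import os


class MissingSecretError(RuntimeError):
    """Raised at startup when a required secret was not provided by the platform."""


def get_secret(name: str) -> str:
    """Read a secret injected into the environment; nothing sensitive lives in the codebase."""
    value = os.environ.get(name)
    if not value:
        # No default value: a silent fallback would hide misconfiguration or encourage hardcoding.
        raise MissingSecretError(f"required secret {name!r} is not set")
    return value
```

For example, `api_key = get_secret("MODEL_API_KEY")` at startup. Environment variables can leak through crash dumps or debugging output, so some teams prefer mounting secrets as files with restricted permissions; the same fail-fast approach applies.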

Auditing and Continuous Improvement

Security is not a one-time setup; it’s an ongoing process. Just as a city’s defenses are continuously tested and improved, your API security must evolve.

Regular Security Audits

Conduct periodic security audits and penetration testing to identify weaknesses in your API and its security controls.

- Internal Audits: Performed by your own security team.

- External Audits: Performed by third-party security experts, who provide an independent assessment.

- Vulnerability Scanning: Utilize automated tools to scan for common vulnerabilities.

Incident Response Planning

Develop and practice a comprehensive incident response plan. What will you do when the unexpected happens?

- Define Roles and Responsibilities: Clearly outline who is responsible for what during an incident.

- Communication Protocols: Establish clear communication channels for internal and external stakeholders.

- Containment, Eradication, and Recovery: Outline steps for containing the breach, removing the threat, and restoring normal operations.

- Post-Incident Analysis: After an incident, conduct a thorough review to understand the root cause and implement measures to prevent recurrence.

Staying Updated with Emerging Threats

The threat landscape is constantly evolving. Stay informed about new vulnerabilities, attack vectors, and security best practices in the AI and API security domains.

- Threat Intelligence: Subscribe to security advisories and threat intelligence feeds.

- Industry Best Practices: Follow recommendations from organizations like OWASP (Open Worldwide Application Security Project), NIST (National Institute of Standards and Technology), and other relevant cybersecurity bodies.

- Community Engagement: Participate in security communities and forums to share knowledge and learn from others.

By implementing these best practices, you can build a robust security posture for your AI model APIs, ensuring that your AI capabilities are accessible to legitimate users while remaining protected from the myriad threats that exist in the digital realm. A well-secured API is like a trusted key that unlocks your AI’s potential safely and effectively.

FAQs

What are AI model APIs?

AI model APIs are application programming interfaces that allow access to artificial intelligence models. These APIs enable developers to integrate AI capabilities into their applications, allowing them to perform tasks such as image recognition, natural language processing, and predictive analytics.

Why is access control important for AI model APIs?

Access control is important for AI model APIs to ensure that only authorized users and applications can access and use the AI models. This helps prevent unauthorized access, misuse, and potential security breaches, protecting the integrity and confidentiality of the AI models and the data they process.

What are best practices for access control and security for AI model APIs?

Best practices for access control and security for AI model APIs include implementing strong authentication mechanisms, such as API keys or OAuth tokens, to verify the identity of users and applications; using role-based access control (RBAC) to define and enforce permissions for accessing AI models; and encrypting data in transit and at rest to protect sensitive information.

How can organizations safeguard their AI model APIs?

Organizations can safeguard their AI model APIs by regularly monitoring and auditing access to the APIs, implementing rate limiting and throttling to prevent abuse and denial-of-service attacks, and conducting regular security assessments and penetration testing to identify and address potential vulnerabilities.

What are the potential risks of not properly securing AI model APIs?

The potential risks of not properly securing AI model APIs include unauthorized access to sensitive AI models and data, misuse of AI capabilities for malicious purposes, data breaches and leaks, and damage to the organization’s reputation and trust. Additionally, insecure AI model APIs can lead to regulatory non-compliance and legal consequences.