This article explores the application of reinforcement learning (RL) to automate penetration testing, aiming to enhance the capabilities and efficiency of security teams.

Introduction to Autonomous Penetration Testing

Penetration testing, often referred to as ethical hacking, involves simulating cyberattacks to identify vulnerabilities in systems, networks, and applications. Traditionally, this process is performed by human experts who manually probe systems, analyze results, and report findings. While human ingenuity and adaptability are invaluable, the sheer scale and complexity of modern IT environments, coupled with the increasing sophistication of threat actors, present significant challenges to manual penetration testing. The time-consuming nature of manual testing can lead to significant delays in identifying and remediating vulnerabilities.

The Limitations of Traditional Penetration Testing

Manual penetration testing, despite its effectiveness, faces several inherent limitations:

- Scalability Issues: As organizations grow their digital footprints, the scope of penetration tests expands, making it difficult for manual testers to cover all critical areas within reasonable timeframes. This is akin to a single person trying to inspect every nook and cranny of a sprawling mansion for hidden weaknesses.

- Human Fatigue and Error: Sustained manual testing can lead to fatigue, which in turn can result in missed vulnerabilities or misinterpretations of findings. The human element, while crucial, is not immune to the effects of prolonged cognitive effort.

- Consistency and Reproducibility: Achieving consistent results across different testers and over time can be challenging. Different individuals may employ slightly different methodologies or have varying levels of expertise in specific areas, leading to variations in penetration test outcomes. Reproducing a complex attack chain precisely can also be difficult.

- Cost and Resource Intensity: Highly skilled penetration testers are in high demand, and their services can be expensive. Organizations may have to limit the frequency or scope of their penetration tests due to budget constraints.

- Reactive vs. Proactive: Manual penetration testing is often conducted periodically, making it a more reactive approach. Emerging threats and newly discovered vulnerabilities may go undetected between scheduled assessments.

The Promise of Automation in Cybersecurity

Automation has become a cornerstone of modern cybersecurity operations, from intrusion detection and prevention to security incident response. The goal is to leverage technology to:

- Increase Speed and Efficiency: Automating repetitive tasks frees up human analysts to focus on more complex and strategic security challenges.

- Enhance Accuracy and Consistency: Automated systems can perform tasks with a higher degree of precision and repeatability, reducing the likelihood of human error.

- Improve Scalability: Automated tools can operate at a scale that is impractical for human teams alone, allowing for broader and deeper security assessments.

- Enable Continuous Monitoring: Automation facilitates continuous security testing and monitoring, allowing organizations to identify and address threats in near real-time.

Autonomous penetration testing represents a significant leap in this automation journey, moving beyond predefined scripts to adaptive and intelligent exploration of systems.

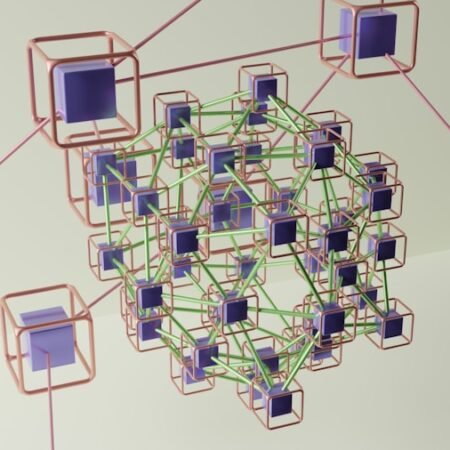

Understanding Reinforcement Learning

Reinforcement learning (RL) is a subfield of machine learning concerned with how intelligent agents ought to take actions in an environment to maximize some notion of cumulative reward. Unlike supervised learning, where data is labeled with correct outputs, or unsupervised learning, which seeks patterns in unlabeled data, RL agents learn through trial and error. The agent interacts with its environment, performs actions, and receives feedback in the form of rewards or penalties. The objective is to learn a policy – a strategy for choosing actions – that maximizes the expected cumulative reward over time.
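To make "expected cumulative reward" concrete, the sketch below computes a discounted return for a single episode. The discount factor `gamma` and the sample reward values are illustrative assumptions, not figures from any real engagement.

```python
def discounted_return(rewards, gamma=0.99):
    """Sum of rewards where the reward at step t is weighted by gamma**t."""
    total = 0.0
    # Walk backwards so each step folds in the discounted future return.
    for reward in reversed(rewards):
        total = reward + gamma * total
    return total


# Hypothetical episode: two uninformative probes, then a successful compromise.
episode_rewards = [-1.0, -1.0, 10.0]
print(discounted_return(episode_rewards, gamma=0.9))
```

A smaller `gamma` makes the agent favor quick wins; a value close to 1 makes it willing to take several unrewarded steps to reach a larger payoff.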

Core Components of Reinforcement Learning

At its heart, RL involves several key components:

- Agent: The entity that learns and makes decisions. In the context of penetration testing, the agent would be the AI “hacker” tasked with finding vulnerabilities.

- Environment: The system or context within which the agent operates. Here, the environment is the target system or network being tested.

- State: A representation of the current situation in the environment. This could include information about the network topology, open ports, running services, or file permissions.

- Action: A decision made by the agent to interact with the environment. Examples include attempting to exploit a known vulnerability, scanning for open ports, or escalating privileges.

- Reward: A numerical feedback signal from the environment indicating the desirability of an action taken in a particular state. Positive rewards are given for successful discoveries (e.g., gaining access, finding a critical vulnerability), while negative rewards are given for unsuccessful or detrimental actions (e.g., triggering an intrusion detection system, causing a system crash).

- Policy: The agent’s strategy for selecting actions based on the current state. The goal of RL is to learn an optimal policy.

- Value Function: A function that estimates the expected future reward from a given state or state-action pair. This helps the agent evaluate the long-term consequences of its actions.
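As a rough illustration of how these components fit together, the following sketch defines a toy, fully simulated environment with a single host. Everything here (the `ToyHostEnv` class, the three action names, and the reward values) is a hypothetical stand-in chosen for readability; nothing touches a real network.

```python
from dataclasses import dataclass

ACTIONS = ("scan", "exploit", "escalate")  # hypothetical action names


@dataclass(frozen=True)
class State:
    """What the agent currently knows about the single simulated host."""
    scanned: bool = False
    has_shell: bool = False
    is_root: bool = False


class ToyHostEnv:
    """Simulated host: scan -> exploit -> escalate reaches the goal."""

    def reset(self) -> State:
        self.state = State()
        return self.state

    def step(self, action: str):
        """Apply an action and return (next_state, reward, done)."""
        s = self.state
        reward = -1.0  # small cost for every step that achieves nothing
        if action == "scan" and not s.scanned:
            s = State(scanned=True)
        elif action == "exploit" and s.scanned and not s.has_shell:
            s = State(scanned=True, has_shell=True)
            reward = 10.0
        elif action == "escalate" and s.has_shell and not s.is_root:
            s = State(scanned=True, has_shell=True, is_root=True)
            reward = 50.0
        self.state = s
        return s, reward, s.is_root


def fixed_policy(state: State) -> str:
    """A hand-written policy; an RL agent would learn this mapping instead."""
    if not state.scanned:
        return "scan"
    return "escalate" if state.has_shell else "exploit"


env = ToyHostEnv()
state, done, total = env.reset(), False, 0.0
while not done:
    state, reward, done = env.step(fixed_policy(state))
    total += reward
print(state, total)
```

The `reset`/`step` shape loosely follows the convention used by common RL toolkits, but this class does not depend on any of them.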

The Learning Process: Trial and Error

The RL agent learns by iteratively interacting with the environment. Imagine a child trying to navigate a maze. They explore different paths, hitting dead ends (negative reward) or finding exits (positive reward). Over time, they learn which sequences of moves lead to the desired outcome. Similarly, an RL agent for penetration testing will try various attack vectors, learn which ones are effective, and which ones are blocked or benign. This process can be visualized as the agent exploring a vast state-action space, seeking paths that lead to the discovery of vulnerabilities.
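One common way to balance trying new paths against reusing known good ones is epsilon-greedy selection. The sketch below is a minimal version of that idea; the action names and value estimates are invented for illustration.

```python
import random


def epsilon_greedy(q_values, epsilon, rng=random):
    """Pick a random action with probability epsilon, else the best-known one.

    q_values maps an action name to its current value estimate.
    """
    if rng.random() < epsilon:
        return rng.choice(list(q_values))  # explore
    return max(q_values, key=q_values.get)  # exploit


# Hypothetical value estimates for one state.
estimates = {"port_scan": 0.2, "exploit_service": 1.5, "brute_force": -0.4}
rng = random.Random(0)  # seeded so the run is repeatable
picks = [epsilon_greedy(estimates, epsilon=0.1, rng=rng) for _ in range(1000)]
print(picks.count("exploit_service") / len(picks))
```

With `epsilon=0.1` the agent mostly repeats its best-known action but still spends about one step in ten probing alternatives.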

Types of Reinforcement Learning Algorithms

Several RL algorithms can be employed, each with its strengths:

- Value-Based Methods (e.g., Q-Learning, Deep Q-Networks – DQN): These algorithms learn a value function that estimates the expected return for each state-action pair. The agent then chooses actions that maximize this estimated value. DQN extends this by using deep neural networks to approximate the value function, enabling it to handle high-dimensional state spaces.

- Policy-Based Methods (e.g., REINFORCE): These algorithms directly learn a policy that maps states to actions. They are often more effective in environments with continuous action spaces.

- Actor-Critic Methods (e.g., A2C, A3C, PPO): These methods combine elements of both value-based and policy-based approaches. An “actor” learns the policy, while a “critic” learns a value function to guide the actor’s learning.

The choice of algorithm depends on the complexity of the environment and the nature of the penetration testing task. For complex cyberattack scenarios, deep reinforcement learning (DRL) techniques that combine deep neural networks with RL algorithms are particularly promising.
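For a sense of what a value-based method does at each step, here is a minimal sketch of the tabular Q-learning update. The state labels, action names, and hyperparameters are assumptions made for the example, and terminal states are not handled separately.

```python
from collections import defaultdict


def q_update(q, state, action, reward, next_state, actions, alpha=0.1, gamma=0.9):
    """One tabular Q-learning step: move Q(s, a) toward the bootstrapped target."""
    best_next = max(q[(next_state, a)] for a in actions)
    target = reward + gamma * best_next
    q[(state, action)] += alpha * (target - q[(state, action)])
    return q[(state, action)]


actions = ["scan", "exploit"]
q = defaultdict(float)  # unseen (state, action) pairs start at 0.0
q[("shell", "exploit")] = 5.0  # pretend this follow-up is already valued
new_value = q_update(
    q, "scanned", "exploit",
    reward=1.0, next_state="shell", actions=actions, alpha=0.5, gamma=0.9,
)
print(new_value)
```

DQN replaces the lookup table `q` with a neural network, while policy-based and actor-critic methods adjust the policy's parameters directly rather than deriving the policy from a value table.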

Applying Reinforcement Learning to Penetration Testing

The core idea is to train an AI agent to mimic and, in some ways, surpass human penetration testers. The agent is provided with a set of tools and techniques and is tasked with exploring a target system to identify exploitable weaknesses. The RL framework provides a structured way to guide this exploration and reward the agent for successful discoveries.

Defining the Environment and States in a Cybersecurity Context

Translating real-world penetration testing into an RL environment requires careful definition:

- Environment: This can be a simulated network environment (a “cyber range”) designed to mimic production systems, a sandboxed replica of specific applications, or even a carefully controlled section of a live network during a scheduled test. The fidelity of the environment is crucial for the agent’s learning to translate effectively to real-world scenarios.

- States: The state representation needs to capture relevant information about the target system. This could include:

  - Network reconnaissance data: IP addresses, open ports, recognized services, operating system versions.

  - System configuration: File permissions, user accounts, installed software.

  - Application status: Running processes, web server responses, application error logs.

  - Previous actions and their outcomes: This allows the agent to learn from its history.

  - Output from security tools: The results of vulnerability scanners, credential checks, etc.

The state space can be vast, requiring sophisticated techniques for representation and processing.
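One simple way to represent such a state is a fixed-length feature vector. The sketch below encodes a single host's observation; the tracked ports, OS labels, and access levels are assumed for the example and would differ in any real setup.

```python
TRACKED_PORTS = (22, 80, 443, 3389)          # assumed ports of interest
KNOWN_OS = ("linux", "windows", "unknown")   # assumed OS labels
ACCESS_LEVELS = ("none", "user", "root")     # assumed privilege levels


def encode_host(observation):
    """Turn one host's scan results into a fixed-length list of 0/1 features."""
    open_ports = set(observation.get("open_ports", ()))
    os_name = observation.get("os", "unknown")
    if os_name not in KNOWN_OS:
        os_name = "unknown"
    access = observation.get("access", "none")

    features = [1.0 if port in open_ports else 0.0 for port in TRACKED_PORTS]
    features += [1.0 if os_name == name else 0.0 for name in KNOWN_OS]
    features += [1.0 if access == level else 0.0 for level in ACCESS_LEVELS]
    return features


obs = {"open_ports": [22, 443], "os": "linux", "access": "user"}
vector = encode_host(obs)
print(vector)
```

Concatenating one such vector per host gives a network-level state, which is where the size of the state space starts to grow quickly.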

Actions: The Penetration Tester’s Toolkit

The actions available to the RL agent are essentially the tools and techniques used by human penetration testers, automated and parameterized:

- Reconnaissance: Port scanning, banner grabbing, OS fingerprinting, DNS enumeration.

- Vulnerability Scanning: Running automated vulnerability scanners against identified services.

- Exploitation: Attempting to leverage known vulnerabilities using exploit code (e.g., from Metasploit).

- Brute-Force Attacks: Attempting to guess passwords for login interfaces.

- Privilege Escalation: Trying to gain higher levels of access once initial access is established.

- Lateral Movement: Exploring other systems on the network once an initial compromise is achieved.

- Data Exfiltration (simulated): Identifying and attempting to access sensitive data.

Each potential action needs to be definable and executable within the RL environment.
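A parameterized toolkit like this is often flattened into a discrete action list that the agent can index into. The following sketch shows one possible layout; the `Action` fields, host names, and exploit names are placeholders and do not refer to real hosts or real exploit modules.

```python
from dataclasses import dataclass
from itertools import product


@dataclass(frozen=True)
class Action:
    """One parameterized step the agent may request from the environment."""
    kind: str         # e.g. "port_scan" or "exploit"
    target: str       # host identifier inside the simulated range
    option: str = ""  # technique-specific parameter, if any


def build_action_space(hosts, exploits):
    """Enumerate every (technique, target, option) combination as a flat list."""
    actions = [Action("port_scan", host) for host in hosts]
    actions += [Action("exploit", host, name) for host, name in product(hosts, exploits)]
    actions += [Action("privilege_escalation", host) for host in hosts]
    return actions


# Placeholder names only.
hosts = ["host-a", "host-b"]
exploits = ["example_exploit_1", "example_exploit_2"]
action_space = build_action_space(hosts, exploits)
index_of = {action: i for i, action in enumerate(action_space)}
print(len(action_space), index_of[Action("exploit", "host-b", "example_exploit_1")])
```

Because the list grows with hosts times exploits, larger networks usually need some form of action masking or hierarchy rather than a flat enumeration.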

Rewards: Incentivizing Vulnerability Discovery

Designing an effective reward function is paramount to guiding the RL agent toward its objective. The reward system should:

- Reward success:

  - Gaining unauthorized access to a system (positive reward).

  - Discovering a critical vulnerability (e.g., remote code execution, SQL injection) (significant positive reward).

  - Successfully escalating privileges (positive reward).

  - Identifying sensitive data (positive reward).

- Penalize failure or undesirable actions:

  - Triggering an intrusion detection system or firewall (negative reward).

  - Causing a system crash or denial of service (significant negative reward).

  - Executing actions that yield no useful information (small negative reward or zero reward).

  - Wasting computational resources on unproductive probes (small negative reward).

The balance and magnitude of these rewards will shape the agent’s learning trajectory. A well-tuned reward function acts like a compass, guiding the agent through the complex landscape of potential attacks.
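The reward scheme described above can be sketched as a lookup table over events reported by the environment. The event names and magnitudes below are illustrative assumptions; only their signs and relative sizes follow the text.

```python
# Illustrative magnitudes only; real values would need tuning per engagement.
REWARDS = {
    "critical_vuln_found": 100.0,
    "access_gained": 50.0,
    "privilege_escalated": 50.0,
    "sensitive_data_found": 30.0,
    "no_new_information": -1.0,
    "detection_triggered": -20.0,
    "service_crashed": -100.0,
}


def compute_reward(events):
    """Sum the reward for every event the environment reported for one action."""
    unknown = [e for e in events if e not in REWARDS]
    if unknown:
        raise ValueError(f"unrecognized events: {unknown}")
    return sum(REWARDS[e] for e in events)


print(compute_reward(["access_gained", "detection_triggered"]))
print(compute_reward(["no_new_information"]))
```

Keeping the table in one place makes it easier to see how, for instance, the detection penalty compares with the access reward, which is exactly the balance that shapes whether the agent learns to be stealthy or noisy.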

Training an Autonomous Penetration Tester

The training process involves:

- Environment Setup: Establishing a secure and realistic cyber range.

- RL Agent Initialization: Defining the agent’s architecture and initial policy, often randomly.

- Exploration and Interaction: The agent interacts with the environment, taking actions based on its current policy and observing the resulting states and rewards.

- Learning and Policy Update: The RL algorithm updates the agent’s policy based on the feedback received, aiming to maximize cumulative rewards. This is where algorithms like DQN or PPO come into play, adjusting the agent’s decision-making process.

- Iteration and Refinement: This cycle of exploration, interaction, and learning repeats over many episodes, often totaling millions of environment steps, gradually improving the agent’s penetration testing capabilities.

The training can be computationally intensive, requiring significant processing power and time.
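The training steps above can be sketched end to end on a toy problem. This example uses tabular Q-learning on a three-stage simulated attack chain; the environment, rewards, and hyperparameters are all assumptions chosen so the loop finishes in well under a second, and it is far simpler than anything a real cyber range would require.

```python
import random
from collections import defaultdict

ACTIONS = ("scan", "exploit", "escalate")
GOAL = 3  # stage 0: nothing known, 1: scanned, 2: shell, 3: root


def step(stage, action):
    """Simulated transition: only the correct next action advances the stage."""
    if ACTIONS[stage] == action:
        stage += 1
        return stage, (50.0 if stage == GOAL else 5.0), stage == GOAL
    return stage, -1.0, False


def train(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0, max_steps=50):
    rng = random.Random(seed)
    q = defaultdict(float)  # agent initialization: all value estimates start at 0
    for _ in range(episodes):
        stage = 0
        for _ in range(max_steps):
            # Exploration and interaction: epsilon-greedy action selection.
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)
            else:
                action = max(ACTIONS, key=lambda a: q[(stage, a)])
            next_stage, reward, done = step(stage, action)
            # Learning and policy update: tabular Q-learning.
            future = 0.0 if done else max(q[(next_stage, a)] for a in ACTIONS)
            q[(stage, action)] += alpha * (reward + gamma * future - q[(stage, action)])
            stage = next_stage
            if done:
                break
    return q


q = train()
learned_policy = [max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(GOAL)]
print(learned_policy)
```

Swapping the table for a neural network and the toy `step` function for a cyber range is where most of the computational cost mentioned above comes from.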

Benefits of Autonomous Penetration Testing

The integration of RL into penetration testing offers several distinct advantages that can significantly bolster an organization’s cybersecurity posture. These benefits address many of the limitations inherent in traditional manual approaches.

Enhanced Efficiency and Speed

Automated systems, guided by RL, can perform repetitive tasks and explore attack vectors far more rapidly than human testers. This allows for:

- Faster Vulnerability Discovery: Critical vulnerabilities can be identified and reported much sooner, reducing the window of exposure.

- Increased Testing Frequency: Organizations can conduct penetration tests much more frequently, enabling continuous security assessments.

- Broader Scope: More systems and applications can be tested within the same timeframe, ensuring more comprehensive coverage. This is like having an army of diligent scouts exploring every inch of the battlefield, rather than a single scout painstakingly mapping sections.

Improved Consistency and Reproducibility

Once training is finished, an RL agent can be run with a fixed policy, for example by always choosing its highest-valued action and fixing any random seeds, even though exploration during training is stochastic. This leads to:

- Standardized Testing: Each test performed by the agent follows the same learned policy, ensuring a consistent approach.

- Reproducible Results: If a vulnerability is found, the agent can, in principle, retrace its steps and reproduce the exploit, aiding in verification and remediation. This removes the variability that can arise from different human testers’ styles or interpretations.
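One way to support this kind of reproduction is to log every step of a run and replay the log later. The sketch below assumes a deterministic environment exposed as a `step_fn(state, action)` function; that interface, the toy environment, and the trace format are all hypothetical.

```python
import json


def run_and_record(step_fn, initial_state, policy, max_steps=20):
    """Run a policy and log every (state, action, reward) so the run can be replayed."""
    trace, state = [], initial_state
    for _ in range(max_steps):
        action = policy(state)
        next_state, reward, done = step_fn(state, action)
        trace.append({"state": state, "action": action, "reward": reward})
        state = next_state
        if done:
            break
    return trace


def replay(step_fn, initial_state, trace):
    """Re-apply the logged actions and confirm the environment responds identically."""
    state = initial_state
    for entry in trace:
        if entry["state"] != state:
            return False
        state, reward, _ = step_fn(state, entry["action"])
        if reward != entry["reward"]:
            return False
    return True


# Toy deterministic environment: the state is a stage counter from 0 to 3.
ORDER = ("scan", "exploit", "escalate")


def toy_step(stage, action):
    if ORDER[stage] == action:
        return stage + 1, 10.0, stage + 1 == len(ORDER)
    return stage, -1.0, False


trace = run_and_record(toy_step, 0, policy=lambda stage: ORDER[stage])
saved = json.dumps(trace)  # the trace can be stored alongside the finding
print(replay(toy_step, 0, json.loads(saved)))
```

A replay that fails is itself useful information: it suggests the target changed between the original run and the verification attempt.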

Scalability to Complex Environments

As systems become more interconnected and complex, manually testing them becomes increasingly challenging. RL agents, particularly those integrated with deep learning, can handle high-dimensional state spaces and intricate attack chains:

- Large-Scale Networks: Autonomous testers can navigate and assess vast IT infrastructures, including cloud environments and IoT deployments, efficiently.

- Complex Attack Paths: RL can learn to chain together multiple seemingly minor vulnerabilities to achieve a significant compromise, a capability that can be difficult for humans to discover exhaustively.

Objective-Driven Security Assessments

RL agents are driven by the objective of maximizing their reward function, which is directly tied to success in finding vulnerabilities. This objective focus can lead to:

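- Unbiased Assessments: The agent does not share a human tester’s preconceived notions about where vulnerabilities might exist; it explores based on learned patterns and system responses, although it can still reflect whatever its training environments and reward function emphasize.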

- Discovery of Novel Attack Vectors: While trained on known techniques, the exploration inherent in RL can sometimes lead to the discovery of previously uncatalogued attack sequences or exploit chains.

Augmenting Human Security Teams

Rather than replacing human security professionals, autonomous penetration testing aims to empower them.

- Focus on Strategic Tasks: By automating the labor-intensive aspects of testing, human testers can dedicate their expertise to:

  - Validating and prioritizing AI-discovered findings.

  - Developing more sophisticated defenses.

  - Conducting in-depth threat hunting and analysis.

  - Designing and overseeing the RL training environments.

- Continuous Security Posture Improvement: The continuous nature of RL-driven testing allows security teams to maintain a more dynamic and adaptive security posture, responding to threats as they emerge rather than during periodic audits.

The agent becomes a tireless digital adversary, constantly probing defenses and providing valuable intelligence that human analysts can then operationalize.

Challenges and Future Directions

Despite the significant potential, the practical implementation of RL for autonomous penetration testing is not without its hurdles. Addressing these challenges will be key to widespread adoption and effectiveness.

Challenges in Real-World Deployment

- Environment Fidelity and Generalization:

  - Cyber Ranges vs. Production: Training in simulated environments (cyber ranges) is crucial for safety, but ensuring that the agent’s learned behavior generalizes effectively to real, complex production systems is a significant challenge. The nuances of a live production environment can be difficult to replicate perfectly.

  - Dynamic Environments: Real-world systems are constantly changing due to updates, patches, and new deployments. An RL agent trained on a specific system configuration might become less effective as that configuration evolves.

- Reward Function Design and Tuning:

  - Defining “Success”: Precisely quantifying the value of discovering different types of vulnerabilities, or the penalty for triggering defenses, can be subjective and difficult to balance. An overly aggressive reward for finding any vulnerability could lead the agent to trigger alarms indiscriminately.

  - Avoiding Reward Hacking: Agents can sometimes learn to exploit loopholes in the reward function itself, achieving high scores without truly achieving the intended security objective. This is like a student finding a way to game the grading system without learning the material.

- Computational Resources and Training Time:

  - Intensive Training: Training sophisticated RL agents requires substantial computational power (GPUs, TPUs) and significant amounts of time, potentially days or even weeks for complex scenarios.

- Ethical Considerations and Safety:

  - Unintended Damage: While designed for testing, poorly trained or misconfigured RL agents could potentially cause actual damage to systems, leading to service disruptions or data loss. Rigorous safety protocols and containment are essential.

  - Adversarial Attacks: RL agents themselves could become targets for adversarial attacks, where attackers attempt to manipulate the agent’s learning process or trick it into overlooking vulnerabilities.

- Interpretability and Explainability:

  - “Black Box” Problem: Deep RL models can act as “black boxes,” making it difficult to understand precisely why an agent took a particular action or how it discovered a specific vulnerability. This lack of interpretability can hinder trust and make it harder for human analysts to validate findings.

- Integration with Existing Security Workflows:

  - Tooling and Orchestration: Seamlessly integrating autonomous testing platforms with existing security operations center (SOC) tools, Security Information and Event Management (SIEM) systems, and incident response workflows requires careful development and implementation.
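As one small example of the containment mentioned under safety, the sketch below wraps action execution in a scope check so that out-of-scope targets and disallowed techniques are refused before anything runs. The `ScopeGuard` class, the action kinds, and the executor are hypothetical; the addresses come from documentation-only ranges.

```python
import ipaddress


class ScopeGuard:
    """Reject any action whose target or technique is outside the agreed scope."""

    def __init__(self, allowed_networks, blocked_kinds=()):
        self.networks = [ipaddress.ip_network(n) for n in allowed_networks]
        self.blocked_kinds = set(blocked_kinds)

    def permits(self, kind, target_ip):
        if kind in self.blocked_kinds:
            return False
        address = ipaddress.ip_address(target_ip)
        return any(address in network for network in self.networks)


def guarded_execute(guard, execute, kind, target_ip):
    """Run `execute` only when the guard allows it; otherwise report a refusal."""
    if not guard.permits(kind, target_ip):
        return {"executed": False, "reason": "out of scope"}
    return {"executed": True, "result": execute(kind, target_ip)}


def fake_execute(kind, ip):
    """Stand-in executor that only returns a description of what would run."""
    return f"simulated {kind} against {ip}"


# 192.0.2.0/24 is reserved for documentation and stands in for a lab subnet.
guard = ScopeGuard(["192.0.2.0/24"], blocked_kinds={"denial_of_service"})
print(guarded_execute(guard, fake_execute, "port_scan", "192.0.2.10"))
print(guarded_execute(guard, fake_execute, "port_scan", "198.51.100.7"))
```

Placing the check outside the agent means a poorly trained policy cannot bypass it, whatever it has learned.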

Future Directions and Research Avenues

The field of RL for cybersecurity is rapidly evolving, with several promising avenues for future development:

- Hybrid Approaches: Combining RL with other AI techniques like knowledge graphs, symbolic AI, or expert systems to enhance interpretability and leverage existing human security knowledge.

- Transfer Learning and Meta-Learning: Developing agents that can quickly adapt to new environments or tasks with minimal retraining, building on knowledge acquired from previous tests.

- Adversarial Reinforcement Learning: Focusing on training agents that can defend against other AI-driven attackers or identify adversarial manipulations within the system.

- Human-in-the-Loop RL: Designing systems where human security experts can closely collaborate with the RL agent, providing guidance, correcting errors, and validating findings in real-time, fostering a more symbiotic relationship.

- Explainable AI (XAI) for RL: Researching methods to make RL decision-making more transparent and understandable to human users, building trust and facilitating effective collaboration.

- Standardized Benchmarks and Datasets: Developing public, realistic, and standardized cyber ranges and datasets to facilitate objective evaluation and comparison of different RL-based penetration testing approaches.

- Ethical AI Frameworks for Cybersecurity: Establishing robust ethical guidelines and regulatory frameworks to govern the development and deployment of autonomous offensive security tools.

The journey towards fully autonomous penetration testing is ongoing, but the integration of reinforcement learning marks a significant stride forward, promising to equip security teams with more intelligent, efficient, and scalable tools to defend against an ever-evolving threat landscape.

Conclusion: Empowering Security Teams for the Future

Autonomous penetration testing, powered by reinforcement learning, represents a paradigm shift in how organizations approach cybersecurity resilience. It moves beyond static, point-in-time assessments to a dynamic, adaptive, and continuous process of threat identification. The ability of RL agents to learn, adapt, and operate at scale offers a potent solution to the challenges posed by increasingly complex and sophisticated cyber threats.

The benefits are clear: faster detection of vulnerabilities, more consistent and comprehensive testing, and the liberation of skilled human security professionals to focus on higher-level strategic tasks. Reinforcement learning acts as a catalyst, transforming the security team’s capacity from that of a vigilant guard watching a fortified wall to an agile defender capable of anticipating and counteracting evolving attackers in a constantly shifting battlefield.

While challenges related to environment fidelity, reward function design, computational resources, and interpretability persist, ongoing research and development are actively addressing these limitations. The future likely lies in hybrid approaches that combine the brute-force exploration and learning capabilities of RL with the critical thinking, contextual understanding, and ethical judgment of human experts.

Ultimately, empowering security teams with autonomous penetration testing capabilities is not about replacing human ingenuity but augmenting it. It is about providing them with intelligent tools that can tirelessly probe defenses, uncover hidden weaknesses, and deliver actionable intelligence. In an era where the speed and sophistication of cyberattacks continue to escalate, embracing such advancements is not merely an option, but a strategic imperative for maintaining robust cybersecurity in the digital age. The proactive and intelligent exploration offered by RL will be a cornerstone in building a more secure digital future.

FAQs

What is reinforcement learning?

Reinforcement learning is a type of machine learning where an agent learns to make decisions by taking actions in an environment to achieve a specific goal. The agent receives feedback in the form of rewards or penalties based on its actions, which helps it learn the best strategies to achieve its objectives.

How can reinforcement learning be applied to autonomous penetration testing?

Reinforcement learning can be applied to autonomous penetration testing by training an agent to simulate the behavior of a malicious attacker and learn how to identify and exploit vulnerabilities in a system. The agent can continuously learn and adapt to new security threats, making it an effective tool for enhancing the capabilities of security teams.

What are the benefits of using reinforcement learning for autonomous penetration testing?

Using reinforcement learning for autonomous penetration testing can help security teams automate the process of identifying and addressing security vulnerabilities, leading to faster response times and improved overall security posture. It can also help in identifying complex attack vectors and improving the resilience of systems against evolving threats.

What are the potential challenges of implementing reinforcement learning for autonomous penetration testing?

Challenges in implementing reinforcement learning for autonomous penetration testing include the need for large amounts of training interaction in a realistic environment and the computing power to support it, the difficulty of designing a reward function the agent cannot game, the potential for the agent to cause unintended damage, and the ethical considerations surrounding the use of autonomous agents in security testing.

How can security teams harness reinforcement learning for autonomous penetration testing effectively?

Security teams can harness reinforcement learning for autonomous penetration testing effectively by carefully designing the training environment, ensuring the agent’s actions align with ethical and legal standards, and continuously monitoring and evaluating the agent’s performance to ensure it aligns with the organization’s security goals.